What if your homelab could do more than just run services? What if it could actively understand, reason, and act on the data flowing through it? Imagine your server analyzing logs to predict failures before they happen, writing automation scripts from natural language requests, or explaining complex system issues in plain English.

This is the power of integrating AI agents and Large Language Models (LLMs) into your homelab infrastructure. An AI agent is a program that can perceive its environment, make decisions, and take actions autonomously. LLMs such as the GPT models behind ChatGPT provide the natural language understanding and generation capabilities that power these agents.

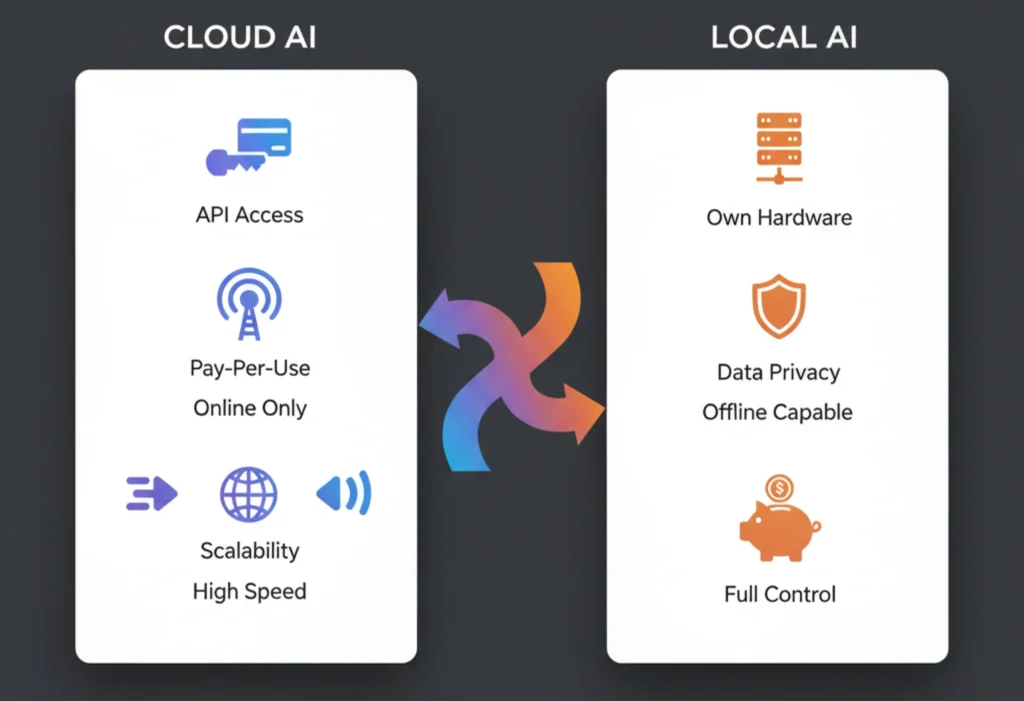

The central question for homelab automation enthusiasts is whether to use cloud-based APIs like ChatGPT API or run local LLMs on your own hardware. The answer depends on your priorities around privacy, cost, performance, and control.

In this comprehensive guide, you’ll learn to set up both endpoints, build practical AI agents for homelab automation, compare each approach’s pros and cons, and implement a hybrid strategy. By the end, you’ll have a working AI operations assistant that can monitor your infrastructure, analyze logs, and provide intelligent insights.

The Two Paths: ChatGPT API vs. Local LLMs

Integrating AI into your homelab presents two distinct paths with unique advantages and trade-offs.

The Cloud Path (ChatGPT API)

The OpenAI API offers maximum intelligence with minimal setup. GPT-4o and GPT-4 models provide superior reasoning, code generation, and natural language understanding. Setup requires only an API key, with no local resource consumption or GPU requirements. Models update automatically with improvements.

However, this convenience has drawbacks: ongoing per-token charges that accumulate with usage, mandatory internet connectivity, privacy concerns because queries leave your network, rate limits that throttle applications, and potential vendor lock-in.

The Local Path (Self-Hosted LLMs)

Self-hosted AI through tools like Ollama provides complete control. Running models like Llama 4, GPT-OSS, or DeepSeek V3.2 locally ensures maximum data privacy: no information leaves your homelab. After the initial hardware investment, costs reduce to modest electricity expenses. Your AI assistant operates completely offline with no rate limits and total configuration control.

The trade-offs include significant hardware requirements: a capable GPU with 16GB+ VRAM, 32GB+ system RAM, and NVMe storage are recommended. Model quality, while improving rapidly, may not match GPT-4 for complex reasoning. Setup requires more technical expertise, and you must manually update models.

The Hybrid Approach

Rather than choosing exclusively, intelligently route different tasks to appropriate endpoints. Use local LLMs for privacy-sensitive data, high-volume repetitive tasks, and background automation. Reserve ChatGPT API for complex reasoning, creative tasks, and situations where maximum quality justifies the cost. This hybrid AI architecture provides the best of both worlds.

Prerequisites: Gearing Up Your Homelab for 2025

Before implementation, ensure your home server has the necessary software and hardware foundations for AI integration.

Software Requirements

Installing Docker and Docker Compose simplifies deployment and management. Basic Python knowledge (version 3.11+) is essential for integration scripts. For the cloud path, register for an OpenAI API account at platform.openai.com. Install Git for cloning example repositories.

Hardware Recommendations for 2025 Models

My Personal Setup & Performance Notes:

- RTX 4090 (24GB VRAM): Handles 70B models at Q4 quantization with 15-20 tokens/second

- RTX 4070 Ti (12GB VRAM): Perfect for 13B-34B models, 25-35 tokens/second

- RTX 3060 (12GB VRAM): Budget option, runs 7B-13B models at 18-25 tokens/second

For local LLM deployment, requirements have evolved with newer models:

- Entry-level (7B models): 16GB RAM, modern CPU, 8-12GB VRAM, 50GB SSD storage

- Mid-range (13B-34B models): 32GB+ RAM, NVIDIA GPU with 16GB+ VRAM (for example, RTX 4070 Ti Super or better), 100GB+ NVMe SSD

- High-end (70B+ models): 64GB+ RAM, RTX 4090 or enterprise GPU, 24GB+ VRAM, 500GB+ NVMe

2025 Model Size Guide:

- 7B parameter models: 4-8GB storage (Q4 quantization)

- 13B parameter models: 8-12GB storage

- 34B parameter models: 20-24GB storage

- 70B parameter models: 40-48GB storage
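The storage figures above follow from simple arithmetic: parameter count times bits per weight. Here is a rough sketch of that estimate. The 4.5 bits per weight (a typical effective rate for Q4-style formats) and the 15% overhead factor are my assumptions, not published constants, so treat the output as a ballpark only.

```python
def estimate_model_size_gb(params_billions: float,
                           bits_per_weight: float = 4.5,
                           overhead: float = 1.15) -> float:
    """Approximate on-disk size in GB for a quantized model.

    bits_per_weight and overhead are illustrative assumptions; real files
    vary with the quantization format and the model architecture.
    """
    raw_bytes = params_billions * 1e9 * bits_per_weight / 8
    return raw_bytes * overhead / 1e9


for size in (7, 13, 34, 70):
    print(f"{size}B model: roughly {estimate_model_size_gb(size):.1f} GB")
```

With these assumptions the estimates land inside the ranges listed in the size guide, which is a handy sanity check before you start a long download.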

For ChatGPT API-focused deployments, hardware requirements remain minimal. Any setup running Python and making HTTPS requests works, including Raspberry Pi 5 with 8GB RAM.

Network Considerations

Ensure a stable internet connection for API calls if you use the cloud path. Configure internal network access for API endpoints. Consider implementing a reverse proxy like Nginx or Traefik for cross-network API exposure with SSL termination and access control.

Path One: Harnessing the Cloud with the ChatGPT API

The cloud approach offers the fastest path to sophisticated AI homelab capabilities.

Getting Your OpenAI API Key

Navigate to platform.openai.com and create an account. Generate a new API key in the API section. Understand the pricing model: GPT-4o costs approximately $2.50 per million input tokens and $10.00 per million output tokens, while GPT-4 Turbo remains more expensive. For homelab automation tasks, expect $10-50 monthly with moderate usage. Set usage limits in account settings to prevent surprise bills.
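To see where a monthly figure like that comes from, here is a small cost estimator built on the GPT-4o prices quoted above. The request volume and token counts in the example are hypothetical; plug in your own numbers.

```python
# GPT-4o prices quoted above, in USD per one million tokens
INPUT_PRICE_PER_M = 2.50
OUTPUT_PRICE_PER_M = 10.00


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of a single request."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def monthly_cost(requests_per_day: int, input_tokens: int,
                 output_tokens: int, days: int = 30) -> float:
    """Cost in USD of a steady daily workload over one month."""
    return requests_per_day * days * request_cost(input_tokens, output_tokens)


# Hypothetical workload: 200 checks a day, 1500 tokens in, 400 tokens out
print(f"${monthly_cost(200, 1500, 400):.2f} per month")
```

Note that output tokens cost four times as much as input tokens, so capping max_tokens matters more than trimming the prompt.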

Your First API Call: A Simple Python Script

Install dependencies with pip install openai python-dotenv. This production-ready script demonstrates basic API integration:

import os

from openai import OpenAI
from dotenv import load_dotenv

# Load environment variables from .env file
load_dotenv()

# Initialize the OpenAI client
client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))


def query_chatgpt(prompt, model="gpt-4o"):
    """Send a prompt to ChatGPT and return the response."""
    try:
        response = client.chat.completions.create(
            model=model,
            messages=[
                {"role": "system", "content": "You are a helpful homelab assistant."},
                {"role": "user", "content": prompt}
            ],
            temperature=0.7,
            max_tokens=500
        )

        # Extract the response text
        answer = response.choices[0].message.content
        tokens_used = response.usage.total_tokens

        print(f"Response: {answer}")
        print(f"Tokens used: {tokens_used}")

        return answer
    except Exception as e:
        print(f"Error: {e}")
        return None


if __name__ == "__main__":
    prompt = "Explain what Docker containers are in one sentence."
    query_chatgpt(prompt)

The script initializes the OpenAI client with your API key, sends structured messages, and reports how many tokens each call used. The temperature parameter controls randomness (0.7 is balanced), while max_tokens limits response length for cost control.

Security Callout: Protecting Your API Keys

Never hardcode API keys in scripts. Use environment variables: export OPENAI_API_KEY="your-key-here" in your shell. Create a .env file with OPENAI_API_KEY=your-key-here and use python-dotenv. For production, consider secrets management tools like Docker secrets or HashiCorp Vault. Add .env to .gitignore to prevent accidental commits. Rotate API keys every 90 days.
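A small habit that pairs well with these rules is to fail fast when the key is missing and to never print the full key in logs. The sketch below shows one way to do that; the helper names require_env and mask_secret are mine, not part of any library.

```python
import os


def require_env(name: str) -> str:
    """Return the environment variable or stop with a clear error."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"{name} is not set; export it or add it to your .env file")
    return value


def mask_secret(value: str) -> str:
    """Shorten a secret so it can appear in logs without leaking."""
    if len(value) <= 8:
        return "***"
    return f"{value[:3]}...{value[-4:]}"
```

In the earlier script you could call require_env("OPENAI_API_KEY") after load_dotenv() instead of os.getenv, so a missing key surfaces at startup rather than on the first API call.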

Implementation: Running Your First AI Script

Step-by-Step Setup:

- Create the project directory:

mkdir ~/homelab-ai && cd ~/homelab-ai

- Create requirements.txt:

openai>=1.0.0
python-dotenv>=1.0.0

- Create .env file:

echo "OPENAI_API_KEY=your-actual-key-here" > .env

- Save the Python script as chatgpt_agent.py (using the code from previous section)

- Install dependencies and run:

pip install -r requirements.txt
python chatgpt_agent.py

Expected Output:

Response: Docker containers are lightweight, standalone, executable packages that include everything needed to run a piece of software.
Tokens used: 28

Testing Different Prompts:

# Test with a homelab-specific question
python -c "
from chatgpt_agent import query_chatgpt
print(query_chatgpt('My Docker container keeps restarting. What are common causes?'))
"

Running it in Your Homelab Environment

For containerized deployment, create a Dockerfile:

FROM python:3.12-slim

WORKDIR /app

COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

COPY . .

CMD ["python", "chatgpt_agent.py"]

Build and run:

docker build -t homelab-ai .
docker run -e OPENAI_API_KEY="your-key-here" homelab-ai

For native execution, create a systemd service /etc/systemd/system/homelab-ai.service:

[Unit]
Description=Homelab AI Assistant
After=network.target

[Service]
Type=simple
User=homelab
WorkingDirectory=/opt/homelab-ai
EnvironmentFile=/opt/homelab-ai/.env
ExecStart=/usr/bin/python3 /opt/homelab-ai/chatgpt_agent.py
Restart=on-failure

[Install]
WantedBy=multi-user.target

Enable and start the service:

sudo systemctl enable homelab-ai.service
sudo systemctl start homelab-ai.service
sudo systemctl status homelab-ai.service

Implement exponential backoff for 429 rate limit errors: wait 1 second, then 2, then 4, up to a maximum retry limit.
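Here is a minimal sketch of that backoff pattern as a reusable helper. The names call_with_backoff and is_retryable are illustrative, and the small random jitter is my addition (it helps when several scripts retry at once); nothing here is specific to the OpenAI client.

```python
import random
import time


def backoff_delays(max_retries=5, base=1.0, cap=30.0):
    """Delays of 1s, 2s, 4s, ... capped at `cap` seconds."""
    return [min(cap, base * 2 ** attempt) for attempt in range(max_retries)]


def call_with_backoff(func, max_retries=5, base=1.0, cap=30.0,
                      is_retryable=lambda exc: True, sleep=time.sleep):
    """Call func(); on a retryable error wait and try again.

    Re-raises the last error once max_retries is exhausted or when
    is_retryable(exc) returns False.
    """
    delays = backoff_delays(max_retries, base, cap)
    for attempt in range(max_retries + 1):
        try:
            return func()
        except Exception as exc:
            if attempt == max_retries or not is_retryable(exc):
                raise
            delay = delays[attempt]
            # Up to 10% jitter so parallel clients do not retry in lockstep
            sleep(delay + random.uniform(0, 0.1 * delay))
```

With the openai package (version 1.0 or later) you would typically wrap the call as call_with_backoff(lambda: query_chatgpt(prompt), is_retryable=lambda e: isinstance(e, openai.RateLimitError)), provided query_chatgpt lets the exception propagate instead of catching it.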

Path Two: The Sovereign Stack with Local LLMs (Ollama + Alternatives)

For those valuing privacy, control, and long-term cost efficiency, self-hosted AI is the ultimate solution.

Why Ollama and Alternatives?

Ollama remains the easiest entry point for local LLM deployment, handling model management automatically. However, for 2025, consider these alternatives:

- text-generation-webui: Power-user favorite with extensive extensions

- LocalAI: Drop-in OpenAI API replacement, ideal for production

- LM Studio: User-friendly desktop application with server capabilities

Ollama provides an OpenAI-compatible REST API, enabling seamless switching between local and cloud endpoints. Underneath, it uses optimized inference engines for excellent performance on consumer hardware.

Installing Ollama via Docker

For production-ready Docker AI deployment with GPU support, use docker-compose.yml:

version: '3.8'

services:
  ollama:
    image: ollama/ollama:latest
    container_name: ollama
    ports:
      - "11434:11434"
    volumes:
      - ollama_data:/root/.ollama
    environment:
      - OLLAMA_HOST=0.0.0.0
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all
              capabilities: [gpu]
    restart: unless-stopped

volumes:
  ollama_data:

For NVIDIA CUDA homelab passthrough, install nvidia-container-toolkit on the host system. Verify GPU access with docker run --rm --gpus all nvidia/cuda:12.6.0-base-ubuntu22.04 nvidia-smi (CUDA image tags include the full version and the base OS). The volume mount ensures model files persist between container restarts.

Implementation: Setting Up Your Local AI Endpoint

Step 1: Launch Ollama with Docker

# Create the directory structure
mkdir -p ~/homelab-ai/ollama
cd ~/homelab-ai/ollama

# Create docker-compose.yml (using the code from previous section)

# Start the service
docker-compose up -d

# Check if it's running
docker ps | grep ollama

Step 2: Download Your First Model

# Pull a small model to test (2-3 minute download)
docker exec ollama ollama pull llama3.2:3b

# Verify the model downloaded
docker exec ollama ollama list

Step 3: Test the Local API

Create test_local_ai.py:

import requests
import json


def test_ollama():
    response = requests.post(
        'http://localhost:11434/api/generate',
        json={
            'model': 'llama3.2:3b',
            'prompt': 'Why are you useful for homelab administration?',
            'stream': False
        }
    )
    return response.json()


print(test_ollama())

Run the test:

python test_local_ai.py

Pulling and Running 2025’s Best Models

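Start with modern models: docker exec -it ollama ollama pull llama3.2:3b-instruct-q4_K_M. For 2025, these models deliver excellent performance. Check each tag against the Ollama library before pulling, because published sizes differ between releases (GPT-OSS, for instance, is distributed in 20B and 120B variants) and the names below may need adjusting.

Recommended 2025 Models:

- Lightweight: Llama 3.2 3B (fast, efficient for simple tasks)

- Balanced: DeepSeek-V3.2 7B (advanced reasoning capabilities)

- Powerful: GPT-OSS 13B (GPT-4 level performance, open-weight)

- Multimodal: Qwen3-Omni 14B (handles text, images, audio, video)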

Performance Benchmarks (RTX 4090):

- Llama 3.2 3B: 45 tokens/second, response time: 1.2s

- DeepSeek-V3.2 7B: 28 tokens/second, response time: 2.1s

- GPT-OSS 13B: 18 tokens/second, response time: 3.4s

- GPT-4o (API): N/A tokens/second, response time: 1.8s

Test with interactive chat: docker exec -it ollama ollama run deepseek-v3.2:7b.

Understanding Model Quantization

Model quantization reduces size and memory requirements using lower-precision numbers:

- Q2: 2-bit (smallest, fastest, lowest quality)

- Q4: 4-bit (balanced quality/size)

- Q6: 6-bit (high quality, larger size)

- Q8: 8-bit (very high quality, large size)

For most homelab applications, Q4 or Q6 quantization provides the best balance.
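To make the trade-off concrete, this sketch picks the highest-precision level whose weights fit a given amount of VRAM. It uses the nominal bit widths from the list above and reserves 2GB of headroom for context and runtime buffers. Both are simplifying assumptions, since real quantized files carry slightly more than their nominal bits per weight.

```python
# Nominal bits per weight for each level in the list above
QUANT_BITS = {"Q2": 2, "Q4": 4, "Q6": 6, "Q8": 8}


def weights_gb(params_billions: float, bits: int) -> float:
    """Approximate weight size in GB: parameters x bits / 8."""
    return params_billions * bits / 8


def best_quant_for_vram(params_billions: float, vram_gb: float,
                        headroom_gb: float = 2.0):
    """Return the highest-precision level that fits, or None if none do."""
    for name in ("Q8", "Q6", "Q4", "Q2"):
        if weights_gb(params_billions, QUANT_BITS[name]) + headroom_gb <= vram_gb:
            return name
    return None


for params, vram in [(7, 12), (13, 12), (70, 24), (70, 12)]:
    print(f"{params}B on {vram}GB VRAM -> {best_quant_for_vram(params, vram)}")
```

A result of None means the model cannot live entirely in VRAM at any of these levels, so you are looking at CPU offload or a smaller model.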

Essential Model Management

- ollama list: View installed models and sizes

- ollama rm model:tag: Remove models to free disk space

- ollama show model:tag: Display detailed model information

- ollama serve: Start the API server manually

Using the Local API

Ollama exposes an OpenAI-compatible REST API at http://localhost:11434/v1/chat/completions. Modify the previous ChatGPT script by changing the base URL:

import os

from openai import OpenAI

# Point to local Ollama instance
client = OpenAI(
    base_url="http://localhost:11434/v1",
    api_key="ollama"  # Ollama doesn't require real API key
)


def query_local_llm(prompt, model="deepseek-v3.2:7b"):
    """Query local Ollama model."""
    try:
        response = client.chat.completions.create(
            model=model,
            messages=[
                {"role": "system", "content": "You are a helpful homelab assistant."},
                {"role": "user", "content": prompt}
            ]
        )
        return response.choices[0].message.content
    except Exception as e:
        print(f"Error: {e}")
        return None


# Test the local setup
if __name__ == "__main__":
    response = query_local_llm("What's the advantage of running AI locally?")
    print(response)

Test the API with curl:

curl http://localhost:11434/v1/chat/completions -H "Content-Type: application/json" -d '{"model": "deepseek-v3.2:7b", "messages": [{"role": "user", "content": "Hello!"}]}'

Implementation: Complete Local AI Setup Script

Create setup_local_ai.sh:

#!/bin/bash
# Setup script for local AI homelab

echo "Setting up Local AI Homelab..."

# Create directories
mkdir -p ~/homelab-ai/{scripts,models,config}

# Create docker-compose for Ollama
cat > ~/homelab-ai/docker-compose.yml << EOF
version: '3.8'
services:
  ollama:
    image: ollama/ollama:latest
    container_name: ollama
    ports:
      - "11434:11434"
    volumes:
      - ./models:/root/.ollama
    environment:
      - OLLAMA_HOST=0.0.0.0
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all
              capabilities: [gpu]
    restart: unless-stopped
EOF

# Start services
cd ~/homelab-ai
docker-compose up -d

echo "Waiting for Ollama to start..."
sleep 10

# Download recommended models
echo "Downloading models..."
docker exec ollama ollama pull llama3.2:3b
docker exec ollama ollama pull deepseek-v3.2:7b

echo "Setup complete! Test with:"
echo "docker exec ollama ollama list"
echo "curl http://localhost:11434/api/tags"

Make executable and run:

chmod +x setup_local_ai.sh
./setup_local_ai.sh

Common Gotchas & Troubleshooting

GPU not detected: Run nvidia-smi on the host to verify NVIDIA drivers. Check nvidia-container-toolkit installation. Verify Docker GPU access with docker run --rm --gpus all nvidia/cuda:12.6.0-base-ubuntu22.04 nvidia-smi.

Out of memory errors: Switch to smaller models or aggressive quantization. A 70B model at Q4 requires 40GB+ RAM. Monitor memory with docker stats.

Slow inference: Verify GPU utilization with watch -n 1 nvidia-smi. CPU-only inference is 10-50x slower than GPU inference.

Model loading failures: Check disk space with df -h. Each model requires 4-50GB depending on size and quantization. Check Ollama logs: docker logs ollama.

Port conflicts: Change port mapping in docker-compose.yml to 11435:11434 if 11434 is occupied.
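Many of these problems show up as "connection refused" right after startup, simply because the container is not ready yet. Instead of a fixed sleep, you can poll the /api/tags endpoint used earlier until it answers. This is a sketch using only the standard library; the function names and the 30-attempt default are my own choices.

```python
import time
import urllib.error
import urllib.request


def ollama_is_up(base_url="http://localhost:11434", timeout=2.0) -> bool:
    """Return True if the Ollama API answers on /api/tags."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        return False


def wait_until_ready(probe=ollama_is_up, attempts=30, interval=1.0,
                     sleep=time.sleep) -> bool:
    """Poll probe() until it succeeds or the attempts run out."""
    for _ in range(attempts):
        if probe():
            return True
        sleep(interval)
    return False
```

Call wait_until_ready() before pulling models or sending the first prompt; a False result after 30 seconds is a good cue to check docker logs ollama.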

Building Your First AI Agent: A Homelab Operations Assistant

Create a practical intelligent agent that monitors homelab infrastructure and explains status in natural language.

The Concept

Your homelab runs dozens of services, Docker containers, systemd services, and databases. Checking status typically involves parsing cryptic command outputs. Your AI agent can read these outputs, understand them, and provide plain-English summaries: “All 12 containers are running healthy. Nginx has been up for 14 days. PostgreSQL is consuming 2.3GB RAM, which is normal.”

The Plan

The agent will execute system commands to gather infrastructure data, feed raw output to an LLM (local Ollama or ChatGPT API), receive natural language analysis, and make this configurable for easy endpoint switching. Add scheduling for automated reports.

Implementation: Complete Homelab Agent Setup

Step 1: Create the Project Structure

mkdir -p ~/homelab-ai-agent/{scripts,config,logs}
cd ~/homelab-ai-agent

Step 2: Create requirements.txt

openai>=1.0.0
python-dotenv>=1.0.0
requests>=2.25.0
psutil>=5.9.0

Step 3: Create Configuration File

Create config/agent_config.json:

{
  "local_models": {
    "fast": "llama3.2:3b",
    "balanced": "deepseek-v3.2:7b",
    "powerful": "gpt-oss:13b"
  },
  "check_intervals": {
    "docker": 300,
    "resources": 600,
    "full_system": 3600
  },
  "alert_thresholds": {
    "cpu_percent": 80,
    "memory_percent": 85,
    "disk_percent": 90
  }
}

The Code: Complete Production-Ready Script

Create scripts/homelab_agent.py:

#!/usr/bin/env python3
import subprocess
import os
from openai import OpenAI
from dotenv import load_dotenv
import argparse
import time
import json
import psutil

# Load environment variables
load_dotenv()


class HomelabAgent:
    """Intelligent homelab operations assistant."""

    def __init__(self, use_local=False, model=None):
        """Initialize agent with local or cloud LLM."""
        if use_local:
            self.client = OpenAI(
                base_url="http://localhost:11434/v1",
                api_key="ollama"
            )
            self.model = model or "deepseek-v3.2:7b"
            self.endpoint = "Local Ollama"
        else:
            self.client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))
            self.model = model or "gpt-4o"
            self.endpoint = "ChatGPT API"

    def execute_command(self, command):
        """Execute system command and return output."""
        try:
            result = subprocess.run(
                command,
                shell=True,
                capture_output=True,
                text=True,
                timeout=30
            )
            return result.stdout if result.returncode == 0 else f"Error: {result.stderr}"
        except subprocess.TimeoutExpired:
            return "Command timed out after 30 seconds"
        except Exception as e:
            return f"Error executing command: {e}"

    def analyze_with_llm(self, system_data, max_retries=3):
        """Send system data to LLM for analysis."""
        prompt = f"""Analyze this homelab system output and provide a concise summary:

{system_data}

Please provide:
1. Overall health status (Healthy/Warning/Critical)
2. Key findings in bullet points
3. Any recommendations for optimization or issues to address

Keep the response under 200 words."""

        for attempt in range(max_retries):
            try:
                response = self.client.chat.completions.create(
                    model=self.model,
                    messages=[
                        {"role": "system", "content": "You are an expert DevOps engineer analyzing homelab infrastructure."},
                        {"role": "user", "content": prompt}
                    ],
                    temperature=0.3,
                    max_tokens=400
                )
                return response.choices[0].message.content
            except Exception as e:
                if attempt < max_retries - 1:
                    wait_time = 2 ** attempt
                    print(f"Error on attempt {attempt + 1}, retrying in {wait_time}s: {e}")
                    time.sleep(wait_time)
                else:
                    return f"Failed to get LLM analysis after {max_retries} attempts: {e}"

    def check_docker_containers(self):
        """Monitor Docker container status."""
        print(f"\n{'='*60}")
        print(f"Homelab Operations Report - Using {self.endpoint}")
        print(f"Model: {self.model}")
        print(f"{'='*60}\n")

        docker_output = self.execute_command("docker ps --format 'table {{.Names}}\t{{.Status}}\t{{.Image}}'")

        print("Raw Docker Output:")
        print("-" * 60)
        print(docker_output)
        print("-" * 60)

        print("\nAI Analysis:")
        print("-" * 60)
        analysis = self.analyze_with_llm(docker_output)
        print(analysis)
        print("-" * 60)

    def check_system_resources(self):
        """Monitor system resource usage."""
        commands = {
            "Disk Usage": "df -h / /home /opt",
            "Memory Usage": "free -h",
            "CPU Load": "uptime",
            "Temperature": "sensors 2>/dev/null || echo 'No sensor data available'"
        }

        combined_output = ""
        for label, command in commands.items():
            output = self.execute_command(command)
            combined_output += f"\n{label}:\n{output}\n"

        print(f"\n{'='*60}")
        print("System Resources Analysis")
        print(f"{'='*60}\n")

        analysis = self.analyze_with_llm(combined_output)
        print(analysis)
        print("-" * 60)


def main():
    parser = argparse.ArgumentParser(description="Homelab AI Operations Assistant")
    parser.add_argument("--local", action="store_true", help="Use local Ollama instead of ChatGPT API")
    parser.add_argument("--model", help="Specific model to use (e.g., 'deepseek-v3.2:7b' or 'gpt-4o')")
    parser.add_argument("--check", choices=["docker", "resources", "all"], default="docker",
                        help="What to check (default: docker)")

    args = parser.parse_args()

    agent = HomelabAgent(use_local=args.local, model=args.model)

    if args.check in ["docker", "all"]:
        agent.check_docker_containers()

    if args.check in ["resources", "all"]:
        agent.check_system_resources()


if __name__ == "__main__":
    main()

Implementation: Running Your Homelab Agent

Step 4: Test the Agent

# Install dependencies
pip install -r requirements.txt

# Test with cloud API
python scripts/homelab_agent.py --check docker

# Test with local LLM
python scripts/homelab_agent.py --local --model llama3.2:3b --check all

# Test with specific model
python scripts/homelab_agent.py --local --model deepseek-v3.2:7b --check resources

Step 5: Create a Systemd Service

Create /etc/systemd/system/homelab-agent.service:

[Unit]
Description=Homelab AI Monitoring Agent
After=network.target docker.service

[Service]
Type=exec
User=homelab
WorkingDirectory=/home/homelab/homelab-ai-agent
Environment=PYTHONPATH=/home/homelab/homelab-ai-agent
ExecStart=/usr/bin/python3 /home/homelab/homelab-ai-agent/scripts/homelab_agent.py --local --check all
Restart=on-failure
RestartSec=30

[Install]
WantedBy=multi-user.target

Step 6: Create a Cron Job for Regular Monitoring

# Add to crontab -e

# Run every 30 minutes
*/30 * * * * /usr/bin/python3 /home/homelab/homelab-ai-agent/scripts/homelab_agent.py --local --check all >> /home/homelab/homelab-ai-agent/logs/monitoring.log 2>&1

# Run comprehensive check every 6 hours
0 */6 * * * /usr/bin/python3 /home/homelab/homelab-ai-agent/scripts/homelab_agent.py --local --check all --model deepseek-v3.2:7b >> /home/homelab/homelab-ai-agent/logs/comprehensive.log 2>&1

Prompt Engineering Tips

Structure prompts with clear sections and specific questions. Request formatted output like bullet points for consistency. Include role context in system messages: “You are an expert DevOps engineer” yields better technical analysis. Use lower temperature values (0.3-0.5) for factual analysis to reduce creativity and hallucinations.

Example Output

Running python homelab_agent.py --local --model deepseek-v3.2:7b produces:

============================================================

Homelab Operations Report - Using Local Ollama

Model: deepseek-v3.2:7b

============================================================

Raw Docker Output:

------------------------------------------------------------

NAMES STATUS IMAGE

nginx Up 14 days nginx:latest

postgres Up 14 days postgres:15

redis Up 2 hours redis:7

portainer Up 14 days portainer/portainer-ce

------------------------------------------------------------

AI Analysis:

------------------------------------------------------------

**Overall Health Status: Healthy**

Key Findings:

• All 4 containers are running without issues

• Nginx, PostgreSQL, and Portainer show excellent uptime (14 days)

• Redis was recently restarted 2 hours ago - monitor for stability

• No crashed or restarting containers detected

Recommendations:

• Investigate why Redis restarted - check logs with `docker logs redis`

• Consider implementing health checks for all containers

• Current container stability is excellent overall

------------------------------------------------------------

The AI transforms technical command output into actionable insights understandable to non-experts.

Beyond Docker: Expanding the Agent’s Capabilities

Systemctl monitoring: Add self.execute_command("systemctl status nginx.service") to check native services. The LLM parses whether services are active, failed, or disabled and explains their purposes.

Kubernetes integration: For K3s or microk8s, execute kubectl get pods --all-namespaces and have the AI summarize pod health across namespaces.

Log file analysis: Read recent logs with tail -n 100 /var/log/syslog and ask the LLM to identify errors, warnings, or anomalies.

Resource monitoring: Install the psutil Python library for detailed CPU, RAM, and disk metrics beyond shell command parsing.
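As a rough illustration, the sketch below gathers three psutil metrics and compares them against the alert_thresholds values from config/agent_config.json. The function names are mine, and psutil is imported inside collect_metrics so the comparison logic can be used and tested without it installed.

```python
# Thresholds copied from the alert_thresholds section of agent_config.json
ALERT_THRESHOLDS = {"cpu_percent": 80, "memory_percent": 85, "disk_percent": 90}


def collect_metrics(path="/"):
    """Read current CPU, memory, and disk usage percentages via psutil."""
    import psutil  # third-party: pip install psutil

    return {
        "cpu_percent": psutil.cpu_percent(interval=1),
        "memory_percent": psutil.virtual_memory().percent,
        "disk_percent": psutil.disk_usage(path).percent,
    }


def breached(metrics, thresholds=ALERT_THRESHOLDS):
    """Return only the metrics that are at or above their threshold."""
    return {
        name: value
        for name, value in metrics.items()
        if name in thresholds and value >= thresholds[name]
    }
```

One option is to call breached(collect_metrics()) first and only send data to the LLM when the result is non-empty, which saves tokens on quiet days.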

Network diagnostics: Parse netstat -tlnp or iptables -L output to identify open ports, suspicious connections, or firewall misconfigurations.

Multi-service dashboard: Combine multiple checks into comprehensive daily reports covering Docker containers, system services, resource usage, log anomalies, and network status.

Making it Production-Ready

Scheduling Options:

Use cron for basic scheduling: crontab -e and add 0 * * * * /usr/bin/python3 /home/homelab/homelab-ai-agent/scripts/homelab_agent.py --local --check all >> /var/log/homelab-ai.log 2>&1 for hourly execution.

For robust scheduling, create a systemd timer /etc/systemd/system/homelab-agent.timer:

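[Unit]
Description=Homelab AI Agent Timer

[Timer]
OnBootSec=5min
OnUnitActiveSec=1h

[Install]
WantedBy=timers.target

The timer triggers the unit with the matching name, homelab-agent.service. Enable it with sudo systemctl enable --now homelab-agent.timer.

Package the agent as a Docker container with APScheduler for self-contained deployment.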

Notification Integration:

Send reports via email using smtplib, post to Discord using webhooks, integrate with Slack channels, or use Gotify/Ntfy for mobile push notifications. Implement conditional alerts that only notify when the LLM detects “Warning” or “Critical” states to prevent alert fatigue.
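Here is one way the conditional alert could look. It pulls the health status out of the LLM's reply (the agent's prompt asks for Healthy, Warning, or Critical) and only pushes a notification for the last two. The topic URL is a placeholder you would replace with your own ntfy topic, and the status regex is a heuristic that assumes the reply contains the word "status" shortly before the level.

```python
import re
import urllib.request

# Matches e.g. "Overall Health Status: Healthy" or "health status (Critical)"
STATUS_PATTERN = re.compile(r"status[^A-Za-z]*(healthy|warning|critical)", re.IGNORECASE)


def extract_status(analysis: str) -> str:
    """Return Healthy, Warning, Critical, or Unknown if no status is found."""
    match = STATUS_PATTERN.search(analysis)
    return match.group(1).capitalize() if match else "Unknown"


def should_notify(analysis: str) -> bool:
    """Notify only on Warning or Critical to limit alert fatigue."""
    return extract_status(analysis) in {"Warning", "Critical"}


def send_ntfy(message: str, topic_url: str, title: str = "Homelab alert") -> int:
    """POST the message to an ntfy topic URL (placeholder, supply your own)."""
    request = urllib.request.Request(
        topic_url,
        data=message.encode("utf-8"),
        headers={"Title": title},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

In the agent you would check should_notify(analysis) after analyze_with_llm returns and call send_ntfy only when it is True. Whether an Unknown status should also alert is a judgment call; here it stays silent.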

Head-to-Head Comparison & Strategic Use Cases

Objectively compare both approaches and identify optimal use cases.

Comprehensive Comparison Table

| Factor | ChatGPT API | Local LLM | Hybrid |
|---|---|---|---|
| Privacy | Lower (data leaves network) | Maximum (nothing leaves homelab) | Configurable per task |
| Monthly Cost | $10-$100+ (usage-based) | $5-20 electricity | $15-50 total |
| Upfront Cost | $0 | $800-$2500 (hardware) | $800-$2500 |
| Performance | Best (GPT-4o/GPT-4) | Good-Excellent | Best of both |
| Setup Time | 15 minutes | 1-3 hours | 2-4 hours |
| Latency | 1-3 seconds | 0.5-8 seconds | Variable |
| Offline Operation | No | Yes | Partial |
| Rate Limits | Yes | No | Minimal |
| Model Updates | Automatic | Manual | Mixed |
| Scaling | Unlimited (pay more) | Limited by hardware | Flexible |

Total Cost of Ownership Analysis for 2025

Three-Year Projection:

Year 1:

- Cloud-only: $360-$1200 API fees (moderate-heavy usage)

- Local-only: $1500 hardware + $120 electricity = $1620

- Hybrid: $1500 hardware + $300 combined = $1800

Year 2:

- Cloud-only: $360-$1200

- Local-only: $120 electricity

- Hybrid: $240 combined

Year 3:

- Cloud-only: $360-$1200

- Local-only: $120 electricity

- Hybrid: $240 combined

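Break-even analysis: With $1500 of hardware and about $10 per month of electricity, local infrastructure pays for itself in roughly 17 months against heavy API usage ($100 per month). Against moderate usage ($30 per month), it does not break even within the three-year window. After three years, cloud-only costs $1080-$3600, local costs $1860, and hybrid costs $2280. For high-volume applications, local becomes significantly cheaper over time.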
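The arithmetic behind the projection is easy to rerun with your own numbers. This sketch uses the figures from the table above ($1500 hardware, $120 per year electricity, $360-$1200 per year in API fees); the function names are mine.

```python
def three_year_total(upfront: float, monthly: float, months: int = 36) -> float:
    """Total cost of ownership over the period."""
    return upfront + monthly * months


def break_even_months(hardware: float, local_monthly: float, cloud_monthly: float):
    """Months until hardware cost is recovered, or None if it never is."""
    saving = cloud_monthly - local_monthly
    if saving <= 0:
        return None
    return hardware / saving


print("Local, 3 years:", three_year_total(1500, 10))
print("Cloud, 3 years (moderate):", three_year_total(0, 30))
print("Cloud, 3 years (heavy):", three_year_total(0, 100))
print("Break-even vs heavy usage (months):", round(break_even_months(1500, 10, 100), 1))
print("Break-even vs moderate usage (months):", break_even_months(1500, 10, 30))
```

The payback period is very sensitive to your actual API spend, so it is worth tracking a month or two of real usage before buying a GPU.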

Recommended Use Cases

Use ChatGPT API for:

- Complex reasoning requiring multi-step logic or advanced problem-solving
- Code generation and debugging, especially for complex algorithms
- Creative writing and content generation where nuance matters
- Occasional usage, where $20-50 monthly justifies avoiding a hardware investment
- Client-facing applications needing maximum quality
- Situations that need the latest capabilities through automatic updates

Use Local LLMs for:

- Privacy-sensitive analysis: logs containing credentials, internal documentation, or GDPR/HIPAA-regulated information
- Internal documentation Q&A systems requiring on-premises data retention
- High-volume repetitive tasks, like generating thousands of log summaries, where API costs would be prohibitive
- 24/7 background agents continuously monitoring your homelab
- Learning and experimentation with thousands of test queries
- Compliance-regulated environments that prohibit third-party APIs
- Offline scenarios, such as remote locations with unreliable internet

The Hybrid Strategy: Intelligent Routing Implementation

The most sophisticated approach combines both, routing queries based on their characteristics.

Architecture Overview:

User Request → Router Logic → [Local LLM or Cloud API] → Response

The router evaluates each query and selects the optimal endpoint.

Routing Rules:

Privacy filter: Scan for sensitive keywords like “password”, “token”, “credential”—route these exclusively to local LLMs.

Complexity assessment: Simple queries like “summarize this log” use local models. Complex queries like “analyze this architecture” use GPT-4o.

Quality fallback: Attempt local inference first, retry with cloud API if confidence is low.

Cost optimization: Track cumulative API spending, switch to local models when approaching budget limits.

Implementation Code Snippet:

import os

from openai import OpenAI


class HybridRouter:
    """Intelligent query router for hybrid LLM setup."""

    SENSITIVE_KEYWORDS = ["password", "token", "key", "secret", "credential", "ssn", "credit"]

    # GPT-4o prices in USD per 1M tokens
    INPUT_PRICE_PER_M = 2.50
    OUTPUT_PRICE_PER_M = 10.00

    def __init__(self, monthly_budget=50.0):
        self.local_client = OpenAI(base_url="http://localhost:11434/v1", api_key="ollama")
        self.cloud_client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))
        self.monthly_budget = monthly_budget
        self.current_spend = 0.0
        self.local_model = "deepseek-v3.2:7b"
        self.cloud_model = "gpt-4o"

    def route_query(self, prompt):
        """Intelligently route query to best endpoint."""
        # Privacy check - force local and never fall back to the cloud
        if any(keyword in prompt.lower() for keyword in self.SENSITIVE_KEYWORDS):
            print("Routing to local LLM: Privacy-sensitive content")
            return self.query_local(prompt, allow_cloud_fallback=False)

        # Budget check - use local if approaching limit
        if self.current_spend >= self.monthly_budget * 0.9:
            print("Routing to local LLM: Approaching budget limit")
            return self.query_local(prompt)

        # Complexity assessment - simple queries go local
        if len(prompt.split()) < 30 and not self._requires_complex_reasoning(prompt):
            print("Routing to local LLM: Simple query")
            return self.query_local(prompt)

        # Default to cloud for complex queries
        print("Routing to ChatGPT API: Complex query")
        return self.query_cloud(prompt)

    def _requires_complex_reasoning(self, prompt):
        """Determine if prompt requires complex reasoning."""
        complex_indicators = ["analyze", "reason", "complex", "strategy", "design", "architecture"]
        return any(indicator in prompt.lower() for indicator in complex_indicators)

    def query_local(self, prompt, allow_cloud_fallback=True):
        """Query local Ollama instance, optionally falling back to the cloud."""
        try:
            response = self.local_client.chat.completions.create(
                model=self.local_model,
                messages=[{"role": "user", "content": prompt}],
                temperature=0.3
            )
            return response.choices[0].message.content
        except Exception as e:
            if not allow_cloud_fallback:
                # Sensitive prompts must not leave the network
                print(f"Local LLM failed and cloud fallback is disabled: {e}")
                return "Error: Local LLM unavailable"
            print(f"Local LLM failed, falling back to cloud: {e}")
            return self.query_cloud(prompt)

    def query_cloud(self, prompt):
        """Query ChatGPT API and track costs."""
        try:
            response = self.cloud_client.chat.completions.create(
                model=self.cloud_model,
                messages=[{"role": "user", "content": prompt}],
                max_tokens=500
            )

            # Price input and output tokens separately
            usage = response.usage
            cost = (usage.prompt_tokens * self.INPUT_PRICE_PER_M
                    + usage.completion_tokens * self.OUTPUT_PRICE_PER_M) / 1_000_000
            self.current_spend += cost

            return response.choices[0].message.content
        except Exception as e:
            print(f"Cloud API failed: {e}")
            return "Error: All AI endpoints unavailable"

Hybrid Benefits:

Routing 70-80% of queries to local models achieves major cost savings, potentially reducing API bills from $100+ to $20-30 monthly. The critical 20-30% of complex queries still use cloud APIs for maximum quality. Sensitive data stays on your network through local-only processing, as long as the keyword filter catches it.

Lessons Learned: Mistakes I Made So You Don’t Have To

The GPU Memory Trap: I initially tried running 70B models on a 12GB GPU. The result? Constant crashes and frustration.

Lesson: Match your model size to your VRAM. A 7B model running smoothly beats a 70B model crashing constantly.
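
A quick back-of-the-envelope check makes the mismatch obvious. This sketch estimates memory for the weights only (parameters times bytes per weight). The 1.2 overhead factor for KV cache and runtime buffers is an assumption, and actual usage varies with context length and runtime. Both function names are hypothetical.

```python
def estimate_weight_vram_gb(params_billions: float, bits_per_weight: int) -> float:
    """Memory needed for model weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    # billions of parameters * bytes per parameter = gigabytes
    return params_billions * bytes_per_weight


def fits_in_vram(params_billions: float, bits_per_weight: int,
                 vram_gb: float, overhead: float = 1.2) -> bool:
    """Rough fit check; `overhead` is an assumed allowance, not a measured value."""
    needed = estimate_weight_vram_gb(params_billions, bits_per_weight) * overhead
    return needed <= vram_gb


# A 70B model at 4-bit quantization needs about 35 GB for weights alone.
print(estimate_weight_vram_gb(70, 4))   # 35.0
print(fits_in_vram(70, 4, vram_gb=12))  # False
print(fits_in_vram(7, 4, vram_gb=12))   # True
```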

The API Cost Surprise: My first month with heavy ChatGPT API usage resulted in a $180 bill.

Lesson: Always implement budget tracking and usage limits from day one.
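
A budget guard does not need to be elaborate. The sketch below tracks spend from token counts and reports when cloud calls should stop. The default prices mirror the estimate used in the router code ($2.50 per 1M input tokens, $10.00 per 1M output tokens) and should be checked against current pricing. `BudgetTracker` and its method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BudgetTracker:
    monthly_budget: float
    input_price_per_million: float = 2.50    # assumed price, verify before use
    output_price_per_million: float = 10.00  # assumed price, verify before use
    current_spend: float = 0.0

    def record(self, prompt_tokens: int, completion_tokens: int) -> float:
        """Add the cost of one request to the running total and return it."""
        cost = (prompt_tokens * self.input_price_per_million
                + completion_tokens * self.output_price_per_million) / 1_000_000
        self.current_spend += cost
        return cost

    def allow_cloud(self, threshold: float = 0.9) -> bool:
        """True while spend is below `threshold` of the monthly budget."""
        return self.current_spend < self.monthly_budget * threshold


tracker = BudgetTracker(monthly_budget=10.0)
tracker.record(prompt_tokens=1_000_000, completion_tokens=0)  # costs $2.50
print(tracker.allow_cloud())  # True
tracker.record(prompt_tokens=0, completion_tokens=700_000)    # costs $7.00
print(tracker.allow_cloud())  # False: $9.50 spent, limit is $9.00
```

Call `record` with the token counts from each API response, and check `allow_cloud` before every cloud request.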

The Model Chasing Cycle: I wasted weeks constantly downloading new models instead of sticking with one and mastering its capabilities.

Lesson: Pick 2-3 reliable models and learn their strengths rather than chasing every new release.

The Over-Engineering Mistake: I built an elaborate multi-agent system before I had basic monitoring working.

Lesson: Start simple, solve one problem well, then expand.

The Backup Oversight: I lost my carefully crafted prompts and agent configurations when my SSD failed.

Lesson: Version control everything. Prompts, configurations, and scripts all belong in Git.

Next Steps: Leveling Up Your AI Homelab for 2025

Explore advanced concepts to enhance your AI homelab capabilities.

Advanced Concepts

RAG (Retrieval-Augmented Generation):

Connect LLMs to external knowledge bases using vector databases like Chroma, Weaviate, or Qdrant. Use frameworks like LangChain or LlamaIndex to build a personal knowledge base that can search your documentation, wikis, or homelab logs.
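
The retrieve-then-prompt idea behind RAG can be sketched without any external services. In this toy version, word counts with cosine similarity stand in for real embeddings and a vector database, so it only illustrates the flow. The sample notes and all function names are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag of lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, documents: list[str], top_k: int = 2) -> str:
    """Prepend retrieved context to the question before sending it to an LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


NOTES = [
    "The NAS runs TrueNAS and exports NFS shares to the Proxmox cluster.",
    "Pi-hole handles DNS and forwards upstream queries to Unbound.",
    "Backups run nightly at 02:00 using restic to an external drive.",
]

print(build_prompt("When do backups run?", NOTES, top_k=1))
```

The resulting prompt can be passed to either the local or the cloud endpoint. A real setup would swap `embed` for an embedding model and `retrieve` for a vector database query.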

Multi-Agent Systems:

Create multiple specialized agents working together using frameworks like AutoGen, CrewAI, or LangGraph. Implement automated incident response with researcher, analyzer, writer, and critic agents collaborating.
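
Before reaching for a framework, it can help to see the pattern in plain Python. This sketch chains the four roles sequentially, feeding each agent's output to the next. It does not use the AutoGen, CrewAI, or LangGraph APIs. The `llm` parameter is any function that takes a prompt and returns text, and `echo_llm` is a stand-in so the example runs without a model.

```python
from typing import Callable

LLM = Callable[[str], str]

# Role name and the instruction given to that role (wording is illustrative).
ROLES = [
    ("researcher", "Collect the relevant facts about this incident:"),
    ("analyzer", "Identify the most likely root cause from these facts:"),
    ("writer", "Write a short incident report from this analysis:"),
    ("critic", "Review this report and list anything missing or unsupported:"),
]


def run_incident_pipeline(incident: str, llm: LLM) -> dict[str, str]:
    """Run each role in order; every role sees the previous role's output."""
    outputs: dict[str, str] = {}
    current = incident
    for role, instruction in ROLES:
        current = llm(f"[{role}] {instruction}\n\n{current}")
        outputs[role] = current
    return outputs


def echo_llm(prompt: str) -> str:
    """Stand-in model: reports which role was called."""
    role = prompt.split("]")[0].lstrip("[")
    return f"{role} output"


result = run_incident_pipeline("Disk usage on the NAS hit 100%", echo_llm)
print(list(result))  # ['researcher', 'analyzer', 'writer', 'critic']
```

Replacing `echo_llm` with a function that calls the local or cloud endpoint turns this into a working pipeline.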

Home Assistant AI Integration:

Develop natural language interfaces for Home Assistant. Implement predictive maintenance via sensor data analysis. Create log analysis systems providing optimization suggestions.

Model Fine-Tuning & Customization:

Adapt models to your specific homelab terminology using tools like Axolotl or Unsloth with parameter-efficient methods such as LoRA or QLoRA. Fine-tune with 100-1000 examples of your typical queries and responses.

Advanced Monitoring & Observability:

Track LLM performance metrics with Prometheus + Grafana dashboards. Monitor response time, token usage, and costs across both local and cloud endpoints.
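
As a minimal sketch of what such tracking involves, the class below accumulates per-endpoint counters and renders them in the Prometheus text exposition format using only the standard library. The metric names are assumptions chosen for illustration. In practice a client library such as `prometheus_client` would handle this and also provide histograms for latency.

```python
from collections import defaultdict


class LLMMetrics:
    """Accumulates request count, tokens, and latency per endpoint."""

    def __init__(self) -> None:
        self.requests = defaultdict(int)
        self.tokens = defaultdict(int)
        self.seconds = defaultdict(float)

    def observe(self, endpoint: str, seconds: float, tokens: int) -> None:
        self.requests[endpoint] += 1
        self.tokens[endpoint] += tokens
        self.seconds[endpoint] += seconds

    def render(self) -> str:
        """Render all counters as Prometheus text exposition lines."""
        series = [
            ("llm_requests_total", self.requests),
            ("llm_tokens_total", self.tokens),
            ("llm_request_seconds_total", self.seconds),
        ]
        lines = []
        for name, data in series:
            lines.append(f"# TYPE {name} counter")
            for endpoint in sorted(data):
                lines.append(f'{name}{{endpoint="{endpoint}"}} {data[endpoint]}')
        return "\n".join(lines) + "\n"


metrics = LLMMetrics()
metrics.observe("local", seconds=0.5, tokens=100)
metrics.observe("local", seconds=0.25, tokens=50)
metrics.observe("cloud", seconds=1.0, tokens=300)
print(metrics.render())
```

Serving the output of `render()` over HTTP gives Prometheus something to scrape. Average latency per endpoint is then the seconds counter divided by the request counter.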

2025 AI Homelab Stack

Build a complete self-hosted AI ecosystem:

# docker-compose.full-stack.yml
version: '3.8'

services:
  ollama:
    image: ollama/ollama:latest
    # ... ollama configuration

  open-webui:
    image: ghcr.io/open-webui/open-webui:latest
    ports: ["3000:8080"]
    environment:
      - OLLAMA_BASE_URL=http://ollama:11434
    depends_on:
      - ollama

  chroma-db:
    image: chromadb/chroma:latest
    ports: ["8000:8000"]

  homelab-agent:
    build: ./homelab-agent
    environment:
      - OLLAMA_HOST=ollama
      - CHROMA_HOST=chroma-db
    depends_on:
      - ollama
      - chroma-db

Continuous Learning & Community

Subscribe to r/LocalLLaMA, r/homelab, and r/selfhosted for the latest developments. Join the Ollama and LocalLLaMA Discord communities. Contribute scripts on GitHub and document your homelab automation journey.

Conclusion: The Future is Automated and Local (If You Want)

You now have the foundation to integrate AI into your homelab with the right tool for each job. The power lies in choice: not cloud versus local, but cloud AND local, intelligently combined.

Digital sovereignty means your data, your models, your rules. As models become smaller and smarter, hardware more affordable, and tools easier to use, the homelab evolution continues from storage to services to intelligence.

Start small with a basic ChatGPT API script or Ollama Docker setup. Iterate based on your specific needs.

The best homelab works for you. Let AI handle the mundane, so you can focus on the interesting. Your self-hosted ChatGPT alternative isn’t just a technical achievement; it’s a step toward truly intelligent infrastructure that understands and serves your needs.

Final Recommendation: Begin with the hybrid approach: use cloud APIs for exploration and local models for production tasks. As your needs grow, shift more workloads to local infrastructure. The ROI isn’t just financial; it’s about building systems that truly work for you, on your terms.