In my previous post, How I Automated My Homelab with ChatGPT-5, I used ChatGPT-5 to generate scripts that monitor my homelab and send real-time system failure alerts directly to my Slack app. This gave me instant visibility into what was happening in my homelab. With alerts in place, the next step was to automate the fixes for the problems they identified. This post, a continuation of the previous one, focuses on how I automated those fixes with ChatGPT-5.

Getting notified about issues is only the first step. It turned out that many of the issues my homelab experienced frequently could be fixed easily through automation: Docker containers crashing due to memory leaks, disk space filling up, network connectivity hiccups, or VM resource constraints. I often found myself applying the same fixes repeatedly: restarting services, clearing logs, reallocating resources.

The solution was to run automated scripts on the server that help with the minor issues. Instead of repeating the same fixes over and over, I decided to automate my homelab using ChatGPT-5. I developed an automated fix framework that acts as a triage system. When an issue is detected, the system first determines if it can be safely resolved automatically by restarting a crashed container or clearing temporary files, or if it requires human intervention, like handling hardware failures or complex configuration issues.

The goal is a homelab that can self-heal from common ailments by restarting a frozen Docker container, reclaiming disk space from a log file, or rebooting an unresponsive VM, freeing me to focus on the bigger and more interesting problems.

The Framework for Automation

The leap to automated fixes in my homelab with ChatGPT-5 required a robust, intelligent, and safe framework. The goal wasn’t to build a reckless “auto-clicker” that blindly executes commands, but rather a thoughtful system that could diagnose issues, determine the right course of action, and execute pre-approved fixes, all while maintaining system integrity.

Tools & Workflow

At its core, ChatGPT-5 acts as the decision-making engine, accessed via API calls embedded in Python scripts. These scripts analyse system states, logs, and alerts, then determine the safest response. The actual execution layer is powered by Bash and Python scripts, with Ansible managing multi-step orchestration across Docker containers and VMs, and cron handling lightweight, time-based checks.
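As a minimal sketch of the decision step, assume the model is asked to reply with a JSON object containing `cause`, `action`, and `category` (the field names used by the script later in this post). The reply can then be validated before anything is executed. The `parse_decision` helper and its fallback behaviour are illustrative assumptions, not part of the original scripts.

```python
import json

# Category names match the ones used by the main script later in this post.
ALLOWED_CATEGORIES = {"auto_fix", "request_permission", "escalate"}

def parse_decision(raw_reply: str) -> dict:
    """Validate a model reply; anything malformed is escalated to a human."""
    fallback = {
        "cause": "Unparseable model reply",
        "action": "Manual investigation required",
        "category": "escalate",
    }
    try:
        decision = json.loads(raw_reply)
    except json.JSONDecodeError:
        return fallback
    if not isinstance(decision, dict):
        return fallback
    if not all(key in decision for key in ("cause", "action", "category")):
        return fallback
    if decision["category"] not in ALLOWED_CATEGORIES:
        return fallback
    return decision
```

Failing closed, so that an unexpected reply becomes an escalation instead of an action, keeps the model in an advisory role.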

The workflow follows a structured four-stage pipeline:

- Detection → The monitoring agent from Part 1 identifies an issue (e.g., “Nextcloud container is down”) and sends an alert to Slack.

- Analysis → A listener process captures the Slack alert and forwards the relevant details to the ChatGPT-5 API, which evaluates logs, resource usage, and error patterns to classify the issue.

- Fix → Depending on the analysis, the system does one of the following:

  - Executes a pre-written remediation script for known, safe issues.

  - Requests human approval before proceeding with potentially risky actions.

  - Escalates directly if human intervention is mandatory.

- Verification → After a fix is applied, a follow-up health check verifies that the issue is resolved, and a detailed report is sent back to Slack for transparency.

This pipeline transforms my homelab into a proactive, intelligent problem-solving system.
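The four stages can be sketched as a single function. This is a rough illustration, not the actual implementation: detection is assumed to have already produced the alert text, and `analyse`, `fix`, `verify`, and `notify` are hypothetical callables standing in for the API call, the remediation script, the health check, and the Slack message.

```python
from typing import Callable

def run_pipeline(
    alert: str,
    analyse: Callable[[str], dict],
    fix: Callable[[dict], bool],
    verify: Callable[[str], bool],
    notify: Callable[[str], None],
) -> str:
    """Run one alert through analysis, fix, and verification; return the outcome."""
    decision = analyse(alert)                      # Stage 2: classify the issue
    if decision.get("category") != "auto_fix":
        notify(f"Needs a human: {alert} ({decision.get('category')})")
        return "escalated"
    if not fix(decision):                          # Stage 3: pre-approved remediation
        notify(f"Fix failed: {alert}")
        return "fix_failed"
    healthy = verify(alert)                        # Stage 4: follow-up health check
    notify(f"{'Resolved' if healthy else 'Still failing'}: {alert}")
    return "resolved" if healthy else "unresolved"

# Example with stub stages; real ones would call the API, Docker, and Slack.
messages = []
outcome = run_pipeline(
    "Nextcloud container is down",
    analyse=lambda alert: {"category": "auto_fix", "action": "restart"},
    fix=lambda decision: True,
    verify=lambda alert: True,
    notify=messages.append,
)
```

Passing the stages in as callables keeps the control flow testable without touching a real container.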

Error Categorisation System

At the heart of the framework is an intelligent error categorisation system. Every detected issue is sorted into one of three categories:

- Safe-to-Automate → Low-risk, routine problems are handled instantly. Examples include restarting a crashed Docker container, clearing a full /tmp directory, or rebooting an unresponsive VM.

- Requires Approval → Actions with a potential system-wide impact, such as applying configuration changes, running updates, or modifying firewall rules, are queued for review. The system sends me a Slack notification containing the proposed fix and a one-click “Approve” button.

- Human-Only → Critical issues like suspected hardware failures, potential security breaches, or data integrity problems bypass automation entirely and trigger immediate admin alerts.

This tiered approach ensures automation remains safe, predictable, and controlled.
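One way to express the three tiers in code is an enum plus a small routing function. The sketch below assumes the category strings used by the script later in this post (`auto_fix`, `request_permission`, `escalate`); the `Category` class, `route` function, and the returned step names are hypothetical.

```python
from enum import Enum

class Category(Enum):
    SAFE_TO_AUTOMATE = "auto_fix"
    REQUIRES_APPROVAL = "request_permission"
    HUMAN_ONLY = "escalate"

def route(category_value: str) -> str:
    """Map a category string to the next step; unknown values go to a human."""
    try:
        category = Category(category_value)
    except ValueError:
        category = Category.HUMAN_ONLY
    if category is Category.SAFE_TO_AUTOMATE:
        return "run_remediation_script"
    if category is Category.REQUIRES_APPROVAL:
        return "queue_for_slack_approval"
    return "alert_admin"
```

Defaulting unknown values to the human-only tier means a typo or an unexpected model reply can never trigger an automated action.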

Safety Nets

Automating fixes without safeguards is a recipe for disaster, so the framework incorporates multiple layers of protection:

- Backups are sacrosanct → They’re never touched by automated scripts.

- Dry-run modes → New scripts first simulate actions without making changes, logging intended operations for review.

- Idempotent scripts → Fixes are designed to be safe to rerun without side effects.

- Approval workflows → Significant changes always require human confirmation.

- Audit trails → Every automated action is logged with full context for easy debugging and historical tracking.

These safety nets allow me to trust automation without surrendering control.
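To make the dry-run and audit-trail ideas concrete, here is a minimal sketch of a wrapper that either simulates or executes a fix and records what happened either way. The `apply_fix` name, the record fields, and the `nextcloud` container name are assumptions for illustration only.

```python
import json
import subprocess
from datetime import datetime, timezone

def apply_fix(name, command, audit_log, dry_run=True):
    """Run (or simulate) a fix and append a JSON audit record either way."""
    record = {
        "time": datetime.now(timezone.utc).isoformat(),
        "fix": name,
        "command": command,
        "dry_run": dry_run,
    }
    if dry_run:
        # Dry run: log the intended operation without changing anything
        record["result"] = "skipped (dry run)"
        succeeded = True
    else:
        completed = subprocess.run(command, capture_output=True, text=True, timeout=30)
        record["result"] = f"exit code {completed.returncode}"
        succeeded = completed.returncode == 0
    audit_log.append(json.dumps(record))
    return succeeded

# Dry run by default: nothing is executed, but the intent is recorded.
audit = []
apply_fix("restart-nextcloud", ["docker", "restart", "nextcloud"], audit)
```

Making `dry_run=True` the default means a new script has to be switched to live execution deliberately.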

By combining ChatGPT-5’s decision-making capabilities with a structured workflow, intelligent error categorisation, and multiple safeguards, this framework turns my homelab into a self-healing infrastructure. Routine issues are resolved instantly, risky operations are reviewed before execution, and critical problems are escalated to me only when necessary.

This isn’t just automation; it’s intelligent, responsible automation.

Implementation of the Homelab Automation system

I will focus more on the scripts I use to automate my homelab.

Step 1: System Setup and Prerequisites

Create the directory structure and install dependencies:

# Create directory structure

sudo mkdir -p /opt/homelab-ai/{scripts,config,logs,backups,snapshots}

sudo chown $USER:$USER /opt/homelab-ai -R

chmod 755 /opt/homelab-ai

# Install system dependencies

sudo apt update && sudo apt install python3-pip python3-venv curl jq

# Set up Python virtual environment

cd /opt/homelab-ai

python3 -m venv venv

source venv/bin/activate

pip install openai requests python-dotenv psutil

Step 2: Configuration Setup

Create the environment configuration:

Note: Replace the OPENAI_API_KEY and SLACK_WEBHOOK_URL placeholders with your actual API key and Slack webhook URL. The webhook URL comes from your Slack app's Incoming Webhooks configuration.

# Install encryption dependencies

sudo apt install gnupg2

# Create encrypted configuration

cat > /opt/homelab-ai/config/secrets.txt << 'EOF'

OPENAI_API_KEY=your_api_key_here

SLACK_WEBHOOK_URL=https://hooks.slack.com/services/YOUR/SLACK/WEBHOOK

EOF

# Encrypt the secrets file

gpg --symmetric --cipher-algo AES256 /opt/homelab-ai/config/secrets.txt

mv /opt/homelab-ai/config/secrets.txt.gpg /opt/homelab-ai/config/.secrets.enc

# Remove plaintext file

rm /opt/homelab-ai/config/secrets.txt

# Create regular configuration

cat > /opt/homelab-ai/config/.env << 'EOF'

# System Parameters (non-sensitive)

MAX_RESTARTS=3

DISK_CRITICAL_PERCENT=90

DISK_WARNING_PERCENT=80

LOG_RETENTION_DAYS=14

NETWORK_INTERFACE=ens18 # Change to your interface (use 'ip a' to find)

BACKUP_RETENTION_DAYS=7

CONTAINER_RESTART_COOLDOWN=300 # 5 minutes between restarts

AUTO_MODEL_DETECTION=true

PREFERRED_MODEL_PRIORITY=gpt-4,gpt-3.5-turbo,gpt-3.5-turbo-16k

EOF

Create Decryption Helper Script

cat > /opt/homelab-ai/scripts/decrypt_secrets.py << 'EOF'

#!/usr/bin/env python3
"""
Secure secrets decryption utility
"""
import os
import subprocess
import tempfile


class SecureConfig:
    def __init__(self, enc_file="/opt/homelab-ai/config/.secrets.enc"):
        self.enc_file = enc_file
        self.secrets = {}
        self.passphrase_file = "/opt/homelab-ai/config/.passphrase"

    def load_secrets(self):
        """Load and decrypt secrets from .enc file"""
        if not os.path.exists(self.enc_file):
            print("Encrypted secrets file not found, running without API keys")
            return {}
        try:
            # Try to get passphrase from environment or file
            passphrase = os.getenv("HOMELAB_PASSPHRASE")
            if not passphrase and os.path.exists(self.passphrase_file):
                with open(self.passphrase_file, 'r') as f:
                    passphrase = f.read().strip()
            if not passphrase:
                print("No passphrase available, running without encrypted secrets")
                return {}

            # Write the passphrase to a private temp file for gpg to read
            with tempfile.NamedTemporaryFile(mode='w', delete=False) as temp_pass:
                temp_pass.write(passphrase)
                temp_pass.flush()
            try:
                # GnuPG 2.1+ only honours --passphrase-file with loopback pinentry
                result = subprocess.run([
                    'gpg', '--batch', '--yes', '--pinentry-mode', 'loopback',
                    '--decrypt', '--passphrase-file', temp_pass.name,
                    self.enc_file
                ], capture_output=True, text=True)
            finally:
                # Always remove the temp passphrase file, even if gpg fails to run
                os.unlink(temp_pass.name)

            if result.returncode == 0:
                # Parse decrypted KEY=VALUE lines, skipping comments
                for line in result.stdout.splitlines():
                    if '=' in line and not line.startswith('#'):
                        key, value = line.strip().split('=', 1)
                        self.secrets[key] = value
                        os.environ[key] = value  # Set in environment
                print(f"Successfully loaded {len(self.secrets)} encrypted secrets")
                return self.secrets
            else:
                print(f"Failed to decrypt secrets: {result.stderr}")
                return {}
        except Exception as e:
            print(f"Error loading encrypted secrets: {e}")
            return {}

    def get_secret(self, key, default=None):
        """Get a specific secret"""
        return self.secrets.get(key, default)


if __name__ == "__main__":
    config = SecureConfig()
    secrets = config.load_secrets()
    print(f"Loaded secrets: {list(secrets.keys())}")

EOF

chmod +x /opt/homelab-ai/scripts/decrypt_secrets.py

Step 3: Enhanced Master AI Engineer Script with Dynamic Model Detection

Create the main monitoring and remediation script with the security and model detection features:

First, navigate to the scripts folder:

cd /opt/homelab-ai/scripts

Then, create a homelab_ai_engineer.py file:

touch homelab_ai_engineer.py

Paste the following code into the file and save it.

#!/usr/bin/env python3

"""

Enhanced Homelab AI Engineer - Master Monitoring & Remediation Script

Features encrypted secrets, dynamic model detection, and intelligent analysis

"""

import subprocess

import requests

import os

import json

import time

import psutil

from pathlib import Path

from datetime import datetime, timedelta

from collections import defaultdict

# Try to import OpenAI, gracefully degrade if not available

try:

from openai import OpenAI

OPENAI_AVAILABLE = True

except ImportError:

OPENAI_AVAILABLE = False

from dotenv import load_dotenv

# Load our custom secure config

import sys

sys.path.append('/opt/homelab-ai/scripts')

from decrypt_secrets import SecureConfig

# ===== CONFIGURATION =====

env_path = Path("/opt/homelab-ai/config/.env")

load_dotenv(dotenv_path=env_path)

# Load encrypted secrets

secure_config = SecureConfig()

secrets = secure_config.load_secrets()

SLACK_WEBHOOK = secrets.get("SLACK_WEBHOOK_URL") or os.getenv("SLACK_WEBHOOK_URL")

OPENAI_API_KEY = secrets.get("OPENAI_API_KEY") or os.getenv("OPENAI_API_KEY")

# System Parameters

MAX_RESTARTS = int(os.getenv("MAX_RESTARTS", "3"))

DISK_CRITICAL_PERCENT = int(os.getenv("DISK_CRITICAL_PERCENT", "90"))

DISK_WARNING_PERCENT = int(os.getenv("DISK_WARNING_PERCENT", "80"))

LOG_RETENTION_DAYS = int(os.getenv("LOG_RETENTION_DAYS", "14"))

NETWORK_INTERFACE = os.getenv("NETWORK_INTERFACE", "ens18")

CONTAINER_RESTART_COOLDOWN = int(os.getenv("CONTAINER_RESTART_COOLDOWN", "300"))

AUTO_MODEL_DETECTION = os.getenv("AUTO_MODEL_DETECTION", "true").lower() == "true"

PREFERRED_MODEL_PRIORITY = os.getenv("PREFERRED_MODEL_PRIORITY", "gpt-4,gpt-3.5-turbo,gpt-3.5-turbo-16k").split(",")

LOG_FILE = "/opt/homelab-ai/logs/ai_engineer.log"

RESTART_COUNTER_FILE = "/opt/homelab-ai/logs/restart_counts.json"

MODEL_CACHE_FILE = "/opt/homelab-ai/logs/available_models.json"

# Initialize OpenAI client if available

openai_client = None

current_model = None

available_models = []

# =========================

def detect_available_models():

"""Detect available OpenAI models and cache results"""

global openai_client

if not openai_client:

log("OpenAI client not available for model detection")

return []

# Check cache first (refresh every hour)

cache_file = Path(MODEL_CACHE_FILE)

if cache_file.exists():

try:

cache_age = time.time() - cache_file.stat().st_mtime

if cache_age < 3600: # 1 hour cache

with open(cache_file, 'r') as f:

cached_data = json.load(f)

log(f"Using cached model list: {cached_data['models']}")

return cached_data['models']

except Exception as e:

log(f"Error reading model cache: {e}", level="WARNING")

try:

log("Detecting available OpenAI models...")

models_response = openai_client.models.list()

# Filter for GPT models

gpt_models = []

for model in models_response.data:

model_id = model.id.lower()

if any(gpt_type in model_id for gpt_type in ['gpt-4', 'gpt-3.5']):

gpt_models.append(model.id)

# Cache the results

cache_data = {

"timestamp": time.time(),

"models": gpt_models

}

os.makedirs(os.path.dirname(MODEL_CACHE_FILE), exist_ok=True)

with open(MODEL_CACHE_FILE, 'w') as f:

json.dump(cache_data, f, indent=2)

log(f"Detected {len(gpt_models)} available GPT models: {gpt_models}")

return gpt_models

except Exception as e:

log(f"Failed to detect available models: {e}", level="ERROR")

return []

def select_best_model():

"""Select the best available model based on priority"""

global available_models, PREFERRED_MODEL_PRIORITY

if not available_models:

log("No models available, using default")

return "gpt-3.5-turbo"

# Find the first available model from our priority list

for preferred_model in PREFERRED_MODEL_PRIORITY:

preferred_model = preferred_model.strip()

# Check exact match first

if preferred_model in available_models:

log(f"Selected model: {preferred_model} (exact match)")

return preferred_model

# Check partial match (for model variations)

for available_model in available_models:

if preferred_model in available_model:

log(f"Selected model: {available_model} (partial match for {preferred_model})")

return available_model

# Fallback to first available model

selected = available_models[0]

log(f"Selected model: {selected} (fallback - first available)")

return selected

def log(message, level="INFO", alert=False):

"""Enhanced logging with levels and optional Slack alerts"""

timestamp = datetime.now().strftime("%Y-%m-%d %H:%M:%S")

log_entry = f"[{timestamp}] [{level}] {message}"

# Write to file

os.makedirs(os.path.dirname(LOG_FILE), exist_ok=True)

with open(LOG_FILE, "a") as f:

f.write(log_entry + "\n")

# Print to console for manual runs

print(log_entry)

if alert:

send_slack_alert(f"🔔 *{level}:* {message}")

def send_slack_alert(message, blocks=None):

"""Send rich messages to Slack with error handling"""

if not SLACK_WEBHOOK:

log("Slack webhook not configured - alert not sent", level="WARNING")

return False

payload = {"text": message}

if blocks:

payload["blocks"] = blocks

try:

response = requests.post(SLACK_WEBHOOK, json=payload, timeout=10)

if response.status_code == 200:

log("Slack alert sent successfully")

return True

else:

log(f"Slack alert failed with status {response.status_code}", level="WARNING")

return False

except Exception as e:

log(f"Failed to send Slack alert: {e}", level="ERROR")

return False

def run_cmd(cmd, timeout=30, capture_output=True):

"""Enhanced command execution with better error handling"""

try:

if capture_output:

result = subprocess.run(cmd, shell=True, capture_output=True, text=True, timeout=timeout)

return result.returncode, result.stdout, result.stderr

else:

result = subprocess.run(cmd, shell=True, timeout=timeout)

return result.returncode, "", ""

except subprocess.TimeoutExpired:

return -1, "", f"Command timed out after {timeout} seconds"

except Exception as e:

return -1, "", str(e)

def load_restart_counts():

"""Load container restart counts from persistent storage"""

try:

if os.path.exists(RESTART_COUNTER_FILE):

with open(RESTART_COUNTER_FILE, 'r') as f:

data = json.load(f)

# Clean old entries (older than 24 hours)

current_time = datetime.now().timestamp()

cleaned_data = {}

for container, info in data.items():

if current_time - info.get('last_restart', 0) < 86400: # 24 hours

cleaned_data[container] = info

return cleaned_data

except Exception as e:

log(f"Error loading restart counts: {e}", level="WARNING")

return {}

def save_restart_counts(counts):

"""Save container restart counts to persistent storage"""

try:

os.makedirs(os.path.dirname(RESTART_COUNTER_FILE), exist_ok=True)

with open(RESTART_COUNTER_FILE, 'w') as f:

json.dump(counts, f, indent=2)

except Exception as e:

log(f"Error saving restart counts: {e}", level="ERROR")

def analyze_failure_deterministic(container_name, logs=""):

"""Enhanced rule-based failure analysis with detailed categorization"""

if not logs:

exitcode, logs, _ = run_cmd(f"docker logs {container_name} --tail 30 2>&1")

if exitcode != 0:

logs = "Could not retrieve container logs"

logs_lower = logs.lower()

# Memory-related issues

if any(term in logs_lower for term in ["out of memory", "oom killed", "killed", "memory"]):

return {

"cause": "Container terminated due to memory exhaustion",

"action": "Increase container memory limits or investigate memory leaks",

"category": "auto_fix",

"confidence": 0.9

}

# Permission issues

elif any(term in logs_lower for term in ["permission denied", "access denied", "forbidden"]):

return {

"cause": "File or directory permission problem",

"action": "Check file permissions, user mappings, and volume mounts",

"category": "request_permission",

"confidence": 0.8

}

# Network connectivity issues

elif any(term in logs_lower for term in ["connection refused", "network unreachable", "timeout", "no route to host"]):

return {

"cause": "Network connectivity or service availability issue",

"action": "Check network configuration, DNS resolution, and target service status",

"category": "auto_fix",

"confidence": 0.7

}

# Disk space issues

elif any(term in logs_lower for term in ["no space left", "disk full", "write failed"]):

return {

"cause": "Insufficient disk space for container operations",

"action": "Free up disk space by cleaning logs and temporary files",

"category": "request_permission",

"confidence": 0.9

}

# Configuration errors

elif any(term in logs_lower for term in ["config", "configuration", "invalid", "syntax error"]):

return {

"cause": "Configuration file or environment variable issue",

"action": "Review container configuration and environment variables",

"category": "escalate",

"confidence": 0.6

}

# Database connection issues

elif any(term in logs_lower for term in ["database", "connection failed", "mysql", "postgresql", "redis"]):

return {

"cause": "Database connectivity or availability issue",

"action": "Check database container status and network connectivity",

"category": "auto_fix",

"confidence": 0.7

}

else:

return {

"cause": "Unknown failure pattern detected in container logs",

"action": "Manual investigation required - check full container logs and system resources",

"category": "escalate",

"confidence": 0.3

}

def query_ai(prompt, context=""):

"""Enhanced AI analysis with dynamic model selection and fallback"""

global openai_client, current_model

if not openai_client or not current_model:

log("AI analysis not available, using rule-based analysis")

# Extract container name from prompt for fallback

container_name = extract_container_name_from_prompt(prompt)

return analyze_failure_deterministic(container_name, context)

try:

full_prompt = f"""

You are a senior SRE managing a homelab infrastructure. Analyze the following issue and provide recommendations.

Context: {context}

Issue: {prompt}

Provide a JSON response with:

1. "cause": A clear, technical explanation of the likely root cause

2. "action": Specific, actionable steps to resolve the issue

3. "category": One of ["auto_fix", "request_permission", "escalate"]

4. "confidence": Float between 0.0-1.0 indicating confidence level

Category guidelines:

- auto_fix: Safe, reversible actions (restart services, flush DNS, renew DHCP)

- request_permission: Actions that modify data/config (cleanup files, update packages)

- escalate: Complex issues requiring human intervention (hardware failures, security issues)

Respond only with valid JSON.

"""

log(f"Using AI model: {current_model} for analysis")

response = openai_client.chat.completions.create(

model=current_model,

messages=[{"role": "user", "content": full_prompt}],

max_tokens=300,

temperature=0.1 # Lower temperature for more consistent responses

)

ai_response = response.choices[0].message.content.strip()

try:

result = json.loads(ai_response)

# Validate required fields

required_fields = ["cause", "action", "category"]

if all(field in result for field in required_fields):

# Ensure confidence is set

if "confidence" not in result:

result["confidence"] = 0.8

log(f"AI analysis completed with confidence: {result['confidence']}")

return result

else:

raise ValueError("Missing required fields in AI response")

except (json.JSONDecodeError, ValueError) as e:

log(f"AI response parsing failed: {e}, falling back to rule-based analysis", level="WARNING")

container_name = extract_container_name_from_prompt(prompt)

return analyze_failure_deterministic(container_name, context)

except Exception as e:

log(f"AI query failed with model {current_model}: {e}, trying fallback", level="WARNING")

# Try to switch to a simpler model if current one fails

if current_model != "gpt-3.5-turbo" and "gpt-3.5-turbo" in available_models:

log("Switching to gpt-3.5-turbo as fallback model")

current_model = "gpt-3.5-turbo"

return query_ai(prompt, context) # Retry with fallback model

# Final fallback to rule-based analysis

container_name = extract_container_name_from_prompt(prompt)

return analyze_failure_deterministic(container_name, context)

def extract_container_name_from_prompt(prompt):

"""Extract container name from analysis prompt"""

container_name = "unknown"

if "Container" in prompt:

parts = prompt.split()

for i, part in enumerate(parts):

if part == "Container" and i + 1 < len(parts):

container_name = parts[i + 1]

break

return container_name

def get_failed_containers():
    """Return (name, status, image) for containers that exited abnormally or are dead"""
    cmd = "docker ps -a --filter 'status=exited' --filter 'status=dead' --format '{{.Names}}|{{.Status}}|{{.Image}}'"
    result = subprocess.run(cmd, shell=True, capture_output=True, text=True)
    failed_containers = []
    for line in result.stdout.splitlines():
        parts = line.split("|")
        if len(parts) < 3:
            continue
        name, status, image = parts[0], parts[1], parts[2]
        # Skip containers that exited normally (exit code 0)
        if "Exited (0)" in status:
            continue
        # Note: no age filter is applied here, so old failures are reported too.
        # Parse the "... ago" part of the status if you want to limit this to recent failures.
        failed_containers.append((name, status, image))
    return failed_containers

def analyze_failure(container_name, logs=""):

"""Your enhanced AI analysis with model detection"""

global openai_client, current_model

if not openai_client:

return analyze_failure_deterministic(container_name, logs)

try:

if not logs:

logs = subprocess.getoutput(f"docker logs {container_name} --tail 50")

log(f"Analyzing failure for {container_name} using model: {current_model}")

response = openai_client.chat.completions.create(

model=current_model,

messages=[{

"role": "user",

"content": f"Analyze this Docker container failure:\n\nContainer: {container_name}\nLogs:\n{logs}\n\nProvide:\n1. Probable cause (1 sentence)\n2. Recommended action"

}],

max_tokens=300

)

analysis = response.choices[0].message.content

log(f"AI analysis completed for {container_name}")

return analysis

except Exception as e:

log(f"AI analysis failed: {str(e)}, using rule-based fallback")

# Try fallback model if available

if current_model != "gpt-3.5-turbo" and "gpt-3.5-turbo" in available_models:

log("Switching to gpt-3.5-turbo as fallback model")

current_model = "gpt-3.5-turbo"

return analyze_failure(container_name, logs) # Retry with fallback

# Use rule-based analysis as final fallback

rule_result = analyze_failure_deterministic(container_name, logs)

return f"Rule-based analysis: {rule_result['cause']} - {rule_result['action']}"

def check_docker():

"""Enhanced Docker container monitoring with restart logic"""

log("Checking Docker health...")

issues_found = []

# Load restart counts

restart_counts = load_restart_counts()

current_time = datetime.now().timestamp()

failed_containers = get_failed_containers()

if not failed_containers:

log("All Docker containers healthy")

return issues_found

for name, status, image in failed_containers:

log(f"Unhealthy container detected: {name} - {status}")

# Get or initialize container restart info

container_info = restart_counts.get(name, {"count": 0, "last_restart": 0})

# Check cooldown period to prevent rapid restarts

if current_time - container_info["last_restart"] < CONTAINER_RESTART_COOLDOWN:

remaining_cooldown = CONTAINER_RESTART_COOLDOWN - (current_time - container_info["last_restart"])

log(f"Container {name} in cooldown period, {remaining_cooldown:.0f}s remaining")

continue

# Get container logs for analysis

exitcode, logs, _ = run_cmd(f"docker logs {name} --tail 50 2>&1")

log_context = logs if exitcode == 0 else "Could not retrieve logs."

# Analyze failure using AI or rule-based approach

analysis = query_ai(

prompt=f"Container {name} ({image}) failed with status: {status}",

context=f"Container logs (last 50 lines):\n{log_context}"

)

# Handle auto-fix category

if analysis.get("category") == "auto_fix" and container_info["count"] < MAX_RESTARTS:

log(f"Attempting auto-fix for {name} (attempt {container_info['count'] + 1}/{MAX_RESTARTS})...")

# Try to restart the container

restart_exitcode, restart_out, restart_err = run_cmd(f"docker restart {name}")

if restart_exitcode == 0:

# Update restart counter

container_info["count"] += 1

container_info["last_restart"] = current_time

restart_counts[name] = container_info

save_restart_counts(restart_counts)

log(f"✅ Success: {name}")

send_slack_alert(

f"✅ *Auto-Fix Applied*\n"

f"*Container:* `{name}`\n"

f"*Action:* Restarted (attempt {container_info['count']}/{MAX_RESTARTS})\n"

f"*Cause:* {analysis.get('cause', 'Unknown')}\n"

f"*Confidence:* {analysis.get('confidence', 0)*100:.0f}%"

)

# Verify container is running after restart

time.sleep(5)

# Braces are doubled twice so the f-string emits Docker's literal {{.Names}} template

verify_exitcode, verify_out, _ = run_cmd(f"docker ps --filter 'name={name}' --filter 'status=running' --format '{{{{.Names}}}}'")

if verify_exitcode == 0 and name in verify_out:

log(f"Container {name} verified running after restart")

else:

log(f"Container {name} failed to start properly after restart", level="WARNING")

analysis['category'] = 'escalate' # Re-categorize as escalation

else:

log(f"❌ Failed: {name}")

analysis['category'] = 'escalate' # Re-categorize as escalation

# Handle containers that hit restart limits or need permission/escalation

if (analysis.get("category") == "auto_fix" and container_info["count"] >= MAX_RESTARTS) or \

analysis.get("category") in ["request_permission", "escalate"]:

# If we hit restart limits, change category to escalate

if container_info["count"] >= MAX_RESTARTS:

analysis['category'] = 'escalate'

analysis['action'] = f"Container has been restarted {MAX_RESTARTS} times. Manual intervention required. Original recommendation: {analysis['action']}"

issue = {

"type": "docker",

"name": name,

"status": status,

"image": image,

"cause": analysis.get("cause", "Unknown cause"),

"action": analysis.get("action", "Manual investigation required"),

"category": analysis.get("category", "escalate"),

"confidence": analysis.get("confidence", 0),

"restart_count": container_info["count"]

}

issues_found.append(issue)

return issues_found

def check_disk():

"""Enhanced disk space monitoring with detailed analysis"""

log("Checking disk health...")

issues_found = []

# Check multiple mount points

mount_points = ["/", "/var", "/tmp", "/opt"]

for mount_point in mount_points:

if not os.path.exists(mount_point):

continue

try:

disk_usage = psutil.disk_usage(mount_point)

usage_percent = (disk_usage.used / disk_usage.total) * 100

log(f"Disk usage for {mount_point}: {usage_percent:.1f}%")

if usage_percent > DISK_CRITICAL_PERCENT:

log(f"Critical disk usage on {mount_point}: {usage_percent:.1f}%", level="ERROR")

# Analyze what's using space

space_analysis = analyze_disk_usage(mount_point)

analysis = query_ai(

prompt=f"Disk usage critical on {mount_point}: {usage_percent:.1f}% used",

context=f"Space analysis:\n{space_analysis}"

)

issue = {

"type": "disk",

"mount_point": mount_point,

"usage_percent": round(usage_percent, 1),

"free_gb": round(disk_usage.free / (1024**3), 2),

"cause": analysis.get("cause", "High disk usage"),

"action": analysis.get("action", "Clean up temporary files and logs"),

"category": analysis.get("category", "request_permission"),

"confidence": analysis.get("confidence", 0),

"space_analysis": space_analysis

}

issues_found.append(issue)

elif usage_percent > DISK_WARNING_PERCENT:

log(f"Warning: Disk usage on {mount_point} is {usage_percent:.1f}%", level="WARNING")

send_slack_alert(f"⚠️ *Disk Warning*\n*Mount:* {mount_point}\n*Usage:* {usage_percent:.1f}%")

except Exception as e:

log(f"Error checking disk usage for {mount_point}: {e}", level="ERROR")

return issues_found

def analyze_disk_usage(mount_point):

"""Analyze what's consuming disk space"""

analysis = []

# Common directories to check

check_dirs = ["/var/log", "/tmp", "/var/lib/docker", "/opt"]

if mount_point != "/":

check_dirs = [mount_point]

for directory in check_dirs:

if os.path.exists(directory):

exitcode, stdout, _ = run_cmd(f"du -sh {directory}/* 2>/dev/null | sort -hr | head -5")

if exitcode == 0 and stdout.strip():

analysis.append(f"{directory} top consumers:")

analysis.append(stdout)

return "\n".join(analysis)

def check_network():
    """Enhanced network connectivity monitoring"""
    log("Checking network health...")
    issues_found = []

    # Test connectivity to multiple targets
    test_targets = [
        ("1.1.1.1", "Cloudflare DNS"),
        ("8.8.8.8", "Google DNS"),
        ("google.com", "External connectivity")
    ]

    failed_tests = []
    for target, description in test_targets:
        if target.endswith(".com"):
            # DNS resolution test
            exitcode, _, _ = run_cmd(f"nslookup {target} >/dev/null 2>&1")
            if exitcode != 0:
                failed_tests.append(f"DNS resolution failed for {target}")
        else:
            # Ping test
            exitcode, _, _ = run_cmd(f"ping -c 3 -W 3 {target} >/dev/null 2>&1")
            if exitcode != 0:
                failed_tests.append(f"Ping failed to {target} ({description})")

    if failed_tests:
        log(f"Network connectivity issues detected: {', '.join(failed_tests)}")

        # Get network interface status
        exitcode, interface_info, _ = run_cmd(f"ip addr show {NETWORK_INTERFACE}")
        gateway_info = ""
        if exitcode == 0:
            gateway_exitcode, gateway_output, _ = run_cmd("ip route show default")
            gateway_info = gateway_output if gateway_exitcode == 0 else "Could not determine gateway"

        analysis = query_ai(
            prompt=f"Network connectivity failures: {', '.join(failed_tests)}. Interface: {NETWORK_INTERFACE}",
            context=f"Interface status:\n{interface_info}\n\nRouting info:\n{gateway_info}"
        )

        # Handle auto-fix for network issues
        if analysis.get("category") == "auto_fix":
            log("Attempting network auto-fix...")
            fix_applied = attempt_network_autofix()
            if fix_applied:
                # Re-test connectivity
                retest_exitcode, _, _ = run_cmd("ping -c 2 -W 2 1.1.1.1 >/dev/null 2>&1")
                if retest_exitcode == 0:
                    log("Network auto-fix successful")
                    send_slack_alert(
                        f"✅ *Network Auto-Fix Applied*\n"
                        f"*Issue:* {', '.join(failed_tests)}\n"
                        f"*Action:* DHCP renewal and DNS cache flush\n"
                        f"*Result:* Connectivity restored"
                    )
                    return issues_found  # Don't report as issue if fixed

            # No fix could be applied, or connectivity is still down: escalate
            log("Network auto-fix failed", level="WARNING")
            analysis['category'] = 'escalate'

        issue = {
            "type": "network",
            "interface": NETWORK_INTERFACE,
            "failed_tests": failed_tests,
            "cause": analysis.get("cause", "Network connectivity failure"),
            "action": analysis.get("action", "Check network configuration and connectivity"),
            "category": analysis.get("category", "escalate"),
            "confidence": analysis.get("confidence", 0)
        }
        issues_found.append(issue)

    return issues_found

def attempt_network_autofix():
    """Attempt common network fixes"""
    fixes_attempted = []

    # Try DHCP renewal
    log("Attempting DHCP renewal...")
    exitcode, _, _ = run_cmd(f"sudo dhclient -r {NETWORK_INTERFACE} && sudo dhclient {NETWORK_INTERFACE}")
    if exitcode == 0:
        fixes_attempted.append("DHCP renewal")
        time.sleep(5)  # Wait for DHCP to complete

    # Try DNS cache flush
    log("Flushing DNS cache...")
    exitcode, _, _ = run_cmd("sudo systemctl restart systemd-resolved")
    if exitcode == 0:
        fixes_attempted.append("DNS cache flush")
        time.sleep(3)

    # Restart the network service only as a last resort,
    # that is, when the lighter fixes did not restore connectivity
    exitcode, _, _ = run_cmd("ping -c 2 -W 2 1.1.1.1 >/dev/null 2>&1")
    if exitcode != 0:
        log("Restarting network service...")
        exitcode, _, _ = run_cmd("sudo systemctl restart networking")
        if exitcode == 0:
            fixes_attempted.append("Network service restart")
            time.sleep(10)

    log(f"Network fixes attempted: {', '.join(fixes_attempted)}")
    return len(fixes_attempted) > 0

def create_slack_blocks(issues):
    """Create enhanced Slack message blocks with rich formatting"""
    if not issues:
        return None

    blocks = []
    blocks.append({
        "type": "header",
        "text": {"type": "plain_text", "text": "🏠 Homelab Status Report"}
    })

    # Group issues by category
    categories = {
        "escalate": {"emoji": "🚨", "title": "Critical Issues - Immediate Attention Required", "issues": []},
        "request_permission": {"emoji": "⚠️", "title": "Actions Requiring Approval", "issues": []},
        "auto_fix": {"emoji": "🔧", "title": "Auto-Fix Attempts", "issues": []}
    }

    for issue in issues:
        category = issue.get("category", "escalate")
        # Treat an unrecognised category as critical instead of raising KeyError
        if category not in categories:
            category = "escalate"
        categories[category]["issues"].append(issue)

    for category, info in categories.items():
        if not info["issues"]:
            continue

        blocks.append({
            "type": "section",
            "text": {"type": "mrkdwn", "text": f"{info['emoji']} *{info['title']}*"}
        })

        for issue in info["issues"]:
            issue_text = format_issue_for_slack(issue)
            blocks.append({
                "type": "section",
                "text": {"type": "mrkdwn", "text": issue_text}
            })

            # Add action buttons for permission requests
            if category == "request_permission":
                blocks.append({
                    "type": "actions",
                    "elements": [
                        {
                            "type": "button",
                            "text": {"type": "plain_text", "text": "✅ Approve"},
                            "value": f"approve_{issue['type']}_{issue.get('name', 'system')}",
                            "action_id": "approve_action",
                            "style": "primary"
                        },
                        {
                            "type": "button",
                            "text": {"type": "plain_text", "text": "❌ Deny"},
                            "value": f"deny_{issue['type']}_{issue.get('name', 'system')}",
                            "action_id": "deny_action",
                            "style": "danger"
                        }
                    ]
                })

        blocks.append({"type": "divider"})

    # Add timestamp
    blocks.append({
        "type": "context",
        "elements": [{
            "type": "mrkdwn",
            "text": f"📅 Report generated: {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}"
        }]
    })

    return blocks

def format_issue_for_slack(issue):
    """Format individual issues for Slack display"""
    issue_type = issue.get("type", "unknown")
    confidence = issue.get("confidence", 0)

    base_text = f"*Type:* {issue_type.title()}\n"

    if issue_type == "docker":
        base_text += f"*Container:* `{issue.get('name', 'unknown')}`\n"
        base_text += f"*Status:* {issue.get('status', 'unknown')}\n"
        if issue.get('restart_count', 0) > 0:
            base_text += f"*Restarts:* {issue['restart_count']}/{MAX_RESTARTS}\n"
    elif issue_type == "disk":
        base_text += f"*Mount Point:* {issue.get('mount_point', 'unknown')}\n"
        base_text += f"*Usage:* {issue.get('usage_percent', 0)}%\n"
        base_text += f"*Free Space:* {issue.get('free_gb', 0)} GB\n"
    elif issue_type == "network":
        base_text += f"*Interface:* {issue.get('interface', 'unknown')}\n"
        failed_tests = issue.get('failed_tests', [])
        if failed_tests:
            # Show at most two failed tests to keep the message short
            base_text += f"*Failed Tests:* {', '.join(failed_tests[:2])}\n"

    base_text += f"*Cause:* {issue.get('cause', 'Unknown')}\n"
    base_text += f"*Recommended Action:* {issue.get('action', 'Manual investigation required')}\n"

    if confidence > 0:
        confidence_emoji = "🟢" if confidence > 0.8 else "🟡" if confidence > 0.5 else "🔴"
        base_text += f"*Confidence:* {confidence_emoji} {confidence*100:.0f}%\n"

    return base_text

def cleanup_old_logs():
    """Clean up old log files to prevent disk space issues"""
    log_dir = Path("/opt/homelab-ai/logs")
    if not log_dir.exists():
        return

    cutoff_date = datetime.now() - timedelta(days=LOG_RETENTION_DAYS)
    cleaned_files = 0

    for log_file in log_dir.glob("*.log*"):
        try:
            if datetime.fromtimestamp(log_file.stat().st_mtime) < cutoff_date:
                log_file.unlink()
                cleaned_files += 1
        except Exception as e:
            log(f"Error cleaning up log file {log_file}: {e}", level="WARNING")

    if cleaned_files > 0:
        log(f"Cleaned up {cleaned_files} old log files")

def create_system_snapshot():
    """Create a system snapshot before making changes"""
    timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")
    snapshot_dir = Path(f"/opt/homelab-ai/snapshots/snapshot_{timestamp}")

    try:
        snapshot_dir.mkdir(parents=True, exist_ok=True)

        # Capture Docker state
        run_cmd(f"docker ps -a > {snapshot_dir}/containers.txt")
        run_cmd(f"docker images > {snapshot_dir}/images.txt")
        run_cmd(f"docker network ls > {snapshot_dir}/networks.txt")

        # Capture system state
        run_cmd(f"df -h > {snapshot_dir}/disk_usage.txt")
        run_cmd(f"ps aux > {snapshot_dir}/processes.txt")
        run_cmd(f"systemctl status > {snapshot_dir}/services.txt")

        # Capture network state
        run_cmd(f"ip addr show > {snapshot_dir}/network_interfaces.txt")
        run_cmd(f"ip route show > {snapshot_dir}/routing_table.txt")

        log(f"System snapshot created: {snapshot_dir}")
        return str(snapshot_dir)
    except Exception as e:
        log(f"Failed to create system snapshot: {e}", level="ERROR")
        return None

def send_health_summary():
    """Send a periodic health summary to Slack"""
    summary_blocks = []

    # System uptime
    exitcode, uptime_output, _ = run_cmd("uptime -p")
    uptime = uptime_output.strip() if exitcode == 0 else "Unknown"

    # Running containers: one name per line, counted with wc -l
    exitcode, docker_output, _ = run_cmd("docker ps --format '{{.Names}}' | wc -l")
    running_containers = int(docker_output.strip()) if exitcode == 0 and docker_output.strip().isdigit() else 0

    # Get failed containers count
    exitcode_failed, docker_failed, _ = run_cmd("docker ps -a --filter 'status=exited' --filter 'status=dead' --format '{{.Names}}' | wc -l")
    failed_containers = int(docker_failed.strip()) if exitcode_failed == 0 and docker_failed.strip().isdigit() else 0

    # Disk usage summary
    exitcode, disk_output, _ = run_cmd("df / --output=pcent | tail -1 | tr -d '% '")
    disk_usage = int(disk_output.strip()) if exitcode == 0 and disk_output.strip().isdigit() else 0

    # Memory usage
    try:
        memory = psutil.virtual_memory()
        memory_usage = round(memory.percent, 1)
    except Exception:
        memory_usage = 0

    summary_blocks.append({
        "type": "header",
        "text": {"type": "plain_text", "text": "🏠 Homelab Health Summary"}
    })

    # Overall status: any failed container or >90% usage is critical, >80% is a warning
    status_emoji = "🟢"
    status_text = "Healthy"
    if disk_usage > 90 or memory_usage > 90 or failed_containers > 0:
        status_emoji = "🔴"
        status_text = "Critical"
    elif disk_usage > 80 or memory_usage > 80:
        status_emoji = "🟡"
        status_text = "Warning"

    container_status = f"{running_containers} running"
    if failed_containers > 0:
        container_status += f", {failed_containers} failed"

    summary_blocks.append({
        "type": "section",
        "text": {
            "type": "mrkdwn",
            "text": f"{status_emoji} *System Status: {status_text}*\n"
                    f"*Uptime:* {uptime}\n"
                    f"*Docker Containers:* {container_status}\n"
                    f"*Disk Usage:* {disk_usage}%\n"
                    f"*Memory Usage:* {memory_usage}%\n"
                    f"*Last Check:* {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}"
        }
    })

    send_slack_alert("", summary_blocks)

def debug_container_status():
    """Debug function to check container status reporting"""
    log("=== DEBUGGING CONTAINER STATUS ===")

    # Test all docker commands used in the script
    commands = [
        "docker ps --format '{{.Names}}'",
        "docker ps --format '{{.Names}}' | wc -l",
        "docker ps -a --filter 'status=exited' --format '{{.Names}}|{{.Status}}'",
        "docker ps -a --filter 'status=dead' --format '{{.Names}}|{{.Status}}'",
        "docker ps -a --filter 'status=exited' --filter 'status=dead' --format '{{.Names}}|{{.Status}}|{{.Image}}'"
    ]

    for cmd in commands:
        exitcode, stdout, stderr = run_cmd(cmd)
        log(f"Command: {cmd}")
        log(f"Exit code: {exitcode}")
        log(f"Output: {stdout}")
        if stderr:
            log(f"Error: {stderr}")
        log("---")

def main():
    """Enhanced main function with better error handling"""
    # These names are shared with query_ai() and the model helpers. Without the
    # global statement they would be locals, and the check further down would
    # raise UnboundLocalError whenever no API key is configured.
    # Assumes all three are initialised at module level earlier in the script.
    global openai_client, available_models, current_model

    start_time = datetime.now()
    log(f"=== Homelab AI Engineer Starting at {start_time.strftime('%Y-%m-%d %H:%M:%S')} ===")

    # Add PID logging for debugging
    import os
    log(f"Process PID: {os.getpid()}")

    # Initialize OpenAI client if available
    if OPENAI_AVAILABLE and OPENAI_API_KEY:
        try:
            openai_client = OpenAI(api_key=OPENAI_API_KEY)
            if AUTO_MODEL_DETECTION:
                available_models = detect_available_models()
                current_model = select_best_model()
            else:
                current_model = "gpt-3.5-turbo"  # Default fallback
        except Exception as e:
            log(f"Failed to initialize OpenAI client: {e}", level="WARNING")

    # Test Slack connectivity first
    if SLACK_WEBHOOK:
        log("Testing Slack connectivity...")
        test_result = send_slack_alert("🔧 Homelab AI Engineer starting health check...")
        if not test_result:
            log("Slack connectivity test failed", level="WARNING")

    # Log model detection results if enabled
    if AUTO_MODEL_DETECTION and openai_client:
        log(f"Auto-model detection enabled. Available models: {available_models}")
        log(f"Selected model: {current_model}")

    # Clean up old logs first
    try:
        cleanup_old_logs()
    except Exception as e:
        log(f"Log cleanup failed: {e}", level="WARNING")

    # Create system snapshot for critical changes
    snapshot_path = None
    try:
        snapshot_path = create_system_snapshot()
    except Exception as e:
        log(f"Snapshot creation failed: {e}", level="WARNING")

    all_issues = []

    try:
        # Run all health checks
        log("Starting comprehensive system health checks...")

        # Docker check with improved error handling
        try:
            docker_issues = check_docker()
            all_issues.extend(docker_issues)
            log(f"Docker check completed: {len(docker_issues)} issues found")
        except Exception as e:
            log(f"Docker check failed: {e}", level="ERROR")
            # Continue with other checks

        # Enhanced disk and network checks
        try:
            disk_issues = check_disk()
            all_issues.extend(disk_issues)
            log(f"Disk check completed: {len(disk_issues)} issues found")
        except Exception as e:
            log(f"Disk check failed: {e}", level="ERROR")

        try:
            network_issues = check_network()
            all_issues.extend(network_issues)
            log(f"Network check completed: {len(network_issues)} issues found")
        except Exception as e:
            log(f"Network check failed: {e}", level="ERROR")

    except Exception as e:
        log(f"Critical error during health checks: {e}", level="ERROR", alert=True)
        send_slack_alert(f"🚨 *CRITICAL ERROR*\n*Message:* {str(e)}\n*Time:* {datetime.now().strftime('%Y-%m-%d %H:%M:%S')}")
        return

    # Send enhanced reports
    try:
        if all_issues:
            # Send detailed issue report
            critical_issues = [i for i in all_issues if i.get('category') == 'escalate']
            if critical_issues:
                log(f"Escalating {len(critical_issues)} critical issue(s)", level="ERROR")
                blocks = create_slack_blocks(critical_issues)
                if blocks:
                    send_slack_alert("🚨 Critical Issues Detected", blocks)

            # Send summary of all issues
            blocks = create_slack_blocks(all_issues)
            if blocks:
                send_slack_alert("", blocks)
        else:
            log("All systems healthy. No action required.")

        # Send periodic health summary with better timing
        summary_flag = Path("/opt/homelab-ai/logs/.last_summary")
        should_send_summary = False

        if summary_flag.exists():
            try:
                last_summary = datetime.fromtimestamp(summary_flag.stat().st_mtime)
                # Send summary every 2 hours instead of 1 for less noise
                if datetime.now() - last_summary > timedelta(hours=2):
                    should_send_summary = True
            except Exception:
                should_send_summary = True
        else:
            should_send_summary = True

        if should_send_summary:
            send_health_summary()
            summary_flag.touch()

    except Exception as e:
        log(f"Error sending reports: {e}", level="ERROR")

    # Log execution summary
    execution_time = (datetime.now() - start_time).total_seconds()
    log(f"=== Homelab AI Engineer Completed in {execution_time:.2f}s ===")

if __name__ == "__main__":
    main()

Step 4: Set up Script for Encrypted Configuration

Create a setup script to help configure the encrypted secrets:

In your home directory, create a file named setup_homelab_ai.sh:

# Create the setup script in your home directory

sudo nano ~/setup_homelab_ai.sh

Paste the following setup script into the file:

#!/bin/bash
# setup_homelab_ai.sh - Interactive setup script

echo "🏠 Homelab AI Engineer Setup"
echo "=============================="

# Check dependencies
echo "Checking dependencies..."
for cmd in python3 docker gpg; do
    if ! command -v "$cmd" &> /dev/null; then
        echo "❌ $cmd is not installed. Please install it first."
        exit 1
    fi
done
echo "✅ All dependencies found"

# Create directory structure
echo "Creating directory structure..."
sudo mkdir -p /opt/homelab-ai/{scripts,config,logs,backups,snapshots}
sudo chown -R "$USER:$USER" /opt/homelab-ai
chmod 755 /opt/homelab-ai

# Setup Python environment
echo "Setting up Python environment..."
cd /opt/homelab-ai
if [ ! -d "venv" ]; then
    python3 -m venv venv
fi
source venv/bin/activate
pip install openai requests python-dotenv psutil

# Get network interface
echo "Detecting network interface..."
DEFAULT_INTERFACE=$(ip route | grep default | awk '{print $5}' | head -n1)
read -r -p "Enter your network interface [$DEFAULT_INTERFACE]: " NETWORK_INTERFACE
NETWORK_INTERFACE=${NETWORK_INTERFACE:-$DEFAULT_INTERFACE}

# Create non-encrypted config
echo "Creating system configuration..."
cat > /opt/homelab-ai/config/.env << EOF
# System Parameters (non-sensitive)
MAX_RESTARTS=3
DISK_CRITICAL_PERCENT=90
DISK_WARNING_PERCENT=80
LOG_RETENTION_DAYS=14
NETWORK_INTERFACE=$NETWORK_INTERFACE
BACKUP_RETENTION_DAYS=7
CONTAINER_RESTART_COOLDOWN=300
AUTO_MODEL_DETECTION=true
PREFERRED_MODEL_PRIORITY=gpt-4,gpt-3.5-turbo,gpt-3.5-turbo-16k
EOF

# Setup encrypted secrets
echo "Setting up encrypted secrets..."
echo "Enter your sensitive configuration:"
read -r -p "OpenAI API Key (optional, press Enter to skip): " OPENAI_KEY
read -r -p "Slack Webhook URL (optional, press Enter to skip): " SLACK_URL

if [ -n "$OPENAI_KEY" ] || [ -n "$SLACK_URL" ]; then
    # Create secrets file
    cat > /opt/homelab-ai/config/secrets.txt << EOF
OPENAI_API_KEY=$OPENAI_KEY
SLACK_WEBHOOK_URL=$SLACK_URL
EOF
    # Keep the plaintext file private until it is encrypted and removed
    chmod 600 /opt/homelab-ai/config/secrets.txt

    echo "Encrypting secrets file..."
    gpg --symmetric --cipher-algo AES256 /opt/homelab-ai/config/secrets.txt
    mv /opt/homelab-ai/config/secrets.txt.gpg /opt/homelab-ai/config/.secrets.enc
    rm /opt/homelab-ai/config/secrets.txt

    echo "✅ Secrets encrypted and stored in .secrets.enc"
    echo "⚠️ Remember your passphrase! You'll need it for decryption."
else
    echo "⚠️ No API keys provided. System will run in rule-based mode only."
fi

# Test the setup
echo "Testing system setup..."
cd /opt/homelab-ai
source venv/bin/activate

# Copy the main script (this would be the enhanced script from above)
if [ ! -f "/opt/homelab-ai/scripts/homelab_ai_engineer.py" ]; then
    echo "⚠️ Please copy the main homelab_ai_engineer.py script to /opt/homelab-ai/scripts/"
    echo "   and the decrypt_secrets.py script as well."
fi

echo ""
echo "🎉 Setup completed!"
echo "Next steps:"
echo "1. Copy the main scripts to /opt/homelab-ai/scripts/"
echo "2. Make them executable: chmod +x /opt/homelab-ai/scripts/*.py"
echo "3. Test run: cd /opt/homelab-ai && source venv/bin/activate && python3 scripts/homelab_ai_engineer.py"
echo "4. Setup cron job: crontab -e"
echo "   Add: */10 * * * * cd /opt/homelab-ai && ./venv/bin/python3 scripts/homelab_ai_engineer.py >> logs/cron.log 2>&1"

After saving the file, make it executable using:

sudo chmod +x ~/setup_homelab_ai.sh

Enter the following to run the script:

cd ~

./setup_homelab_ai.sh

This takes you back to your home directory and then runs the setup script. You will be prompted for your user password, your API key, your Slack webhook URL, and your network interface. Fill in each one accordingly. You can skip the API key and Slack URL, as we have already saved them in our secret.txt file above.

Given that we have already placed the homelab scripts in /opt/homelab-ai/scripts/, make them executable:

sudo chmod +x /opt/homelab-ai/scripts/*.py

Step 5: Enhanced Cron Configuration

Create a more sophisticated cron setup:

# Create a temporary file
cat > /tmp/homelab_cron << 'EOF'
# Sophisticated Homelab AI Engineer Cron Configuration

# Environment setup
SHELL=/bin/bash
PATH=/usr/local/sbin:/usr/local/bin:/sbin:/bin:/usr/sbin:/usr/bin
HOME=/opt/homelab-ai
HOMELAB_PASSPHRASE="your_actual_gpg_passphrase_here"

# ==================== MAIN MONITORING JOBS ====================

# Main AI engineer runs every 10 minutes
*/10 * * * * cd /opt/homelab-ai && ./venv/bin/python3 scripts/homelab_ai_engineer.py >> logs/cron.log 2>&1

# Daily maintenance at 2 AM
0 2 * * * cd /opt/homelab-ai && ./venv/bin/python3 scripts/disk_cleanup.py >> logs/maintenance.log 2>&1

# Weekly model cache refresh (Sunday at midnight)
0 0 * * 0 rm -f /opt/homelab-ai/logs/available_models.json

# Monthly log cleanup (first day of month at 3 AM)
0 3 1 * * find /opt/homelab-ai/logs -name "*.log*" -mtime +30 -delete

# Weekly system health report (Sunday at 9 AM)
0 9 * * 0 cd /opt/homelab-ai && ./venv/bin/python3 -c "import sys; sys.path.append('/opt/homelab-ai/scripts'); from homelab_ai_engineer import send_health_summary; send_health_summary()" >> logs/weekly_report.log 2>&1

# ==================== ENHANCED MONITORING ====================

# Verify cron is working (every 6 hours)
0 */6 * * * /opt/homelab-ai/scripts/verify_cron.sh

# Daily backup of configuration files (2:30 AM)
30 2 * * * /opt/homelab-ai/scripts/backup_config.sh

# ==================== SECURITY ENHANCEMENTS ====================

# Rotate cron logs monthly (first day at 4 AM)
0 4 1 * * find /var/log/ -name "cron*" -mtime +90 -delete

# Monitor cron job execution (every hour)
0 * * * * /opt/homelab-ai/scripts/monitor_execution.sh
EOF

Make sure you replace your_actual_gpg_passphrase_here with your own passphrase, the same one that was used to encrypt your secret.txt.
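The decryption helper itself is not shown in this section, so here is a rough sketch of how a script could use the HOMELAB_PASSPHRASE variable from the cron environment to read .secrets.enc. The function names `parse_secrets` and `load_secrets` are hypothetical, and this is not necessarily how decrypt_secrets.py works. It assumes GnuPG 2.1 or newer, where `--pinentry-mode loopback` is needed for a passphrase passed on stdin.

```python
import os
import subprocess

# Path written by the setup script
SECRETS_FILE = "/opt/homelab-ai/config/.secrets.enc"


def parse_secrets(text):
    """Parse KEY=VALUE lines, skipping blank lines and comments."""
    secrets = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, since values such as URLs may contain '='
        key, value = line.split("=", 1)
        secrets[key.strip()] = value.strip()
    return secrets


def load_secrets(path=SECRETS_FILE):
    """Decrypt the file with gpg; return {} if that is not possible."""
    passphrase = os.environ.get("HOMELAB_PASSPHRASE")
    if not passphrase:
        return {}  # no passphrase available: caller can fall back to rule-based mode
    try:
        result = subprocess.run(
            ["gpg", "--batch", "--quiet", "--pinentry-mode", "loopback",
             "--passphrase-fd", "0", "--decrypt", path],
            input=passphrase + "\n",  # gpg reads the passphrase from stdin (fd 0)
            capture_output=True,
            text=True,
        )
    except OSError:
        return {}  # gpg is not installed or could not be started
    if result.returncode != 0:
        return {}
    return parse_secrets(result.stdout)
```

Passing the passphrase on stdin keeps it out of the process list, which a `--passphrase` command-line argument would not.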

# Install the crontab

sudo crontab /tmp/homelab_cron

# Verify it was installed correctly

sudo crontab -l
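
Before installing, it can help to sanity-check the file. The following is a minimal sketch, not part of the original scripts: `check_crontab` is a hypothetical helper that counts job lines, flags lines without five schedule fields plus a command, and warns if the passphrase placeholder was left in place. It only understands five-field schedules, so shortcuts such as `@reboot` would be reported as problems.

```python
import re

# Matches environment assignments such as SHELL=/bin/bash
ENV_LINE = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*\s*=")
PLACEHOLDER = "your_actual_gpg_passphrase_here"


def check_crontab(text):
    """Return (job_count, problems) for crontab content given as a string."""
    jobs = 0
    problems = []
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or comment
        if ENV_LINE.match(line):
            if PLACEHOLDER in line:
                problems.append(f"line {number}: passphrase placeholder was not replaced")
            continue
        # A job line needs five schedule fields followed by a command
        fields = line.split(None, 5)
        if len(fields) < 6:
            problems.append(f"line {number}: expected 5 schedule fields and a command")
        else:
            jobs += 1
    return jobs, problems
```

For example, `check_crontab(open("/tmp/homelab_cron").read())` returns the number of jobs found and a list of human-readable problems, which should be empty before you run `sudo crontab /tmp/homelab_cron`.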

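Manually test the setup by running the script with the virtual environment's interpreter, since that is where the dependencies were installed:

cd /opt/homelab-ai && ./venv/bin/python3 scripts/homelab_ai_engineer.py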

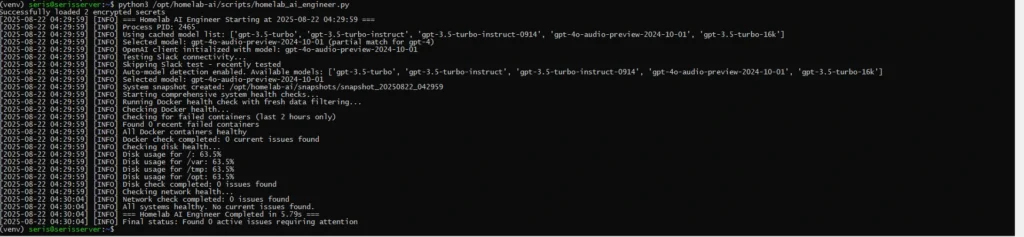

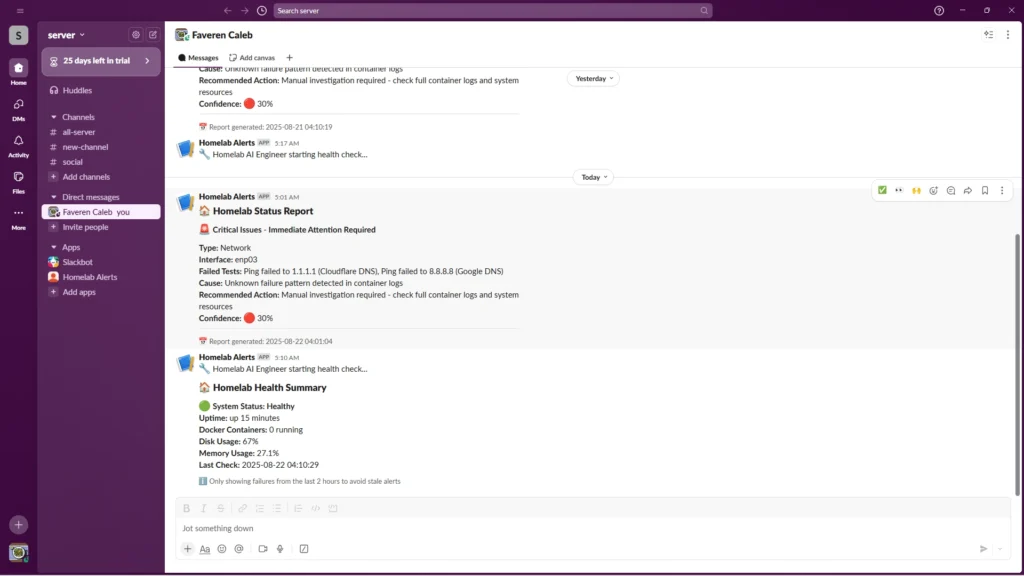

Results from the test in command line.

Homelab Report in Slack

The script is designed to be quiet when no issue is detected. It only sends Slack messages when:

- A problem is detected

- The weekly scheduled report runs (Sundays at 9 AM)

- More than 2 hours have passed since the last health summary
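
The 2-hour rule can be isolated into a small pure function, which makes it easy to test without touching the flag file. This is a sketch of the same comparison main() performs on the modification time of .last_summary; the names `summary_due` and `should_notify` are illustrative and do not appear in the script.

```python
from datetime import datetime, timedelta

SUMMARY_INTERVAL = timedelta(hours=2)  # same interval main() uses


def summary_due(last_sent, now, interval=SUMMARY_INTERVAL):
    """True if no summary was ever sent or the interval has elapsed."""
    if last_sent is None:
        return True
    return now - last_sent > interval


def should_notify(issues, last_sent, now):
    """Slack stays quiet unless there are issues or a summary is due."""
    return bool(issues) or summary_due(last_sent, now)


now = datetime(2025, 1, 1, 12, 0, 0)
print(summary_due(now - timedelta(hours=1), now))        # False: sent an hour ago
print(summary_due(now - timedelta(hours=3), now))        # True: older than 2 hours
print(should_notify([], now - timedelta(hours=1), now))  # False: healthy and recent
```

With the cron job running every 10 minutes, this means a healthy system produces at most one summary message roughly every two hours.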

Slack Notifications Schedule

Issue-Based Alerts (whenever problems are detected):

- Critical issues: Immediate Slack alert

- Warnings: Immediate Slack alert

- Auto-fixes: Immediate Slack alert when applied
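
Behind these alerts, the script sorts each issue into one of the three categories used in create_slack_blocks: escalate, request_permission, and auto_fix. As a minimal sketch of that grouping step, the hypothetical helper `group_by_category` below also routes any missing or unrecognised category to escalate, on the assumption that an issue the system cannot classify is safer to surface to a human than to drop.

```python
KNOWN_CATEGORIES = ("escalate", "request_permission", "auto_fix")


def group_by_category(issues):
    """Group issue dicts by category; unknown categories are escalated."""
    groups = {name: [] for name in KNOWN_CATEGORIES}
    for issue in issues:
        category = issue.get("category", "escalate")
        if category not in groups:
            category = "escalate"  # unclassifiable issues go to a human
        groups[category].append(issue)
    return groups


issues = [
    {"type": "docker", "category": "auto_fix"},
    {"type": "disk", "category": "request_permission"},
    {"type": "network"},                        # no category given
    {"type": "vm", "category": "investigate"},  # unexpected value
]
groups = group_by_category(issues)
print({name: len(items) for name, items in groups.items()})
# {'escalate': 2, 'request_permission': 1, 'auto_fix': 1}
```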